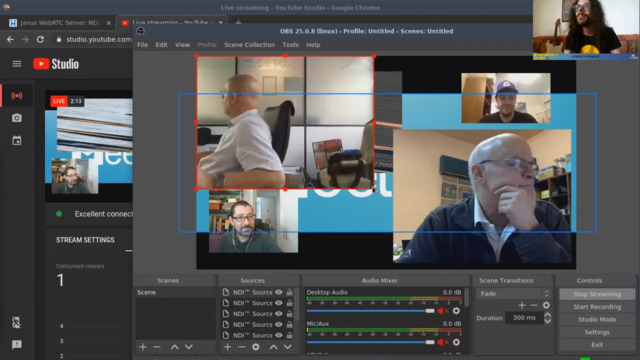

You may remember that a couple of months ago I wrote a blog post on a cool experiment I had worked on: a “simple” way to bridge the gap between the WebRTC and NDI worlds, something that had apparently been lacking in the broadcasting industry, using a new Janus plugin. At the time, this sparked a lot of interest in the broadcasting community, and since then I have also presented the effort in a couple of different venues: I gave a live demo of the Janus NDI plugin in action during the ClueCon Dangerous Demos session, involving live feeds of people from all over the world, and I had the chance to describe the effort in a bit more detail in a nice chat with Arin Sime as well.

Of course, we didn’t sit on our hands in the meantime! Taking note of the huge interest people had shown in this, we started a partnership with Dan Jenkins and Nimble Ape, and while there’s still work to do (more on that in a minute!) this led to the first small announcement you can see below.

Before addressing what broadcaster.vc is and how it will work, though, let’s first go through some of the changes the Janus NDI plugin went through, and what we did to try and turn a cool experiment into something more reliable and usable in the real world as well.

Where were we?

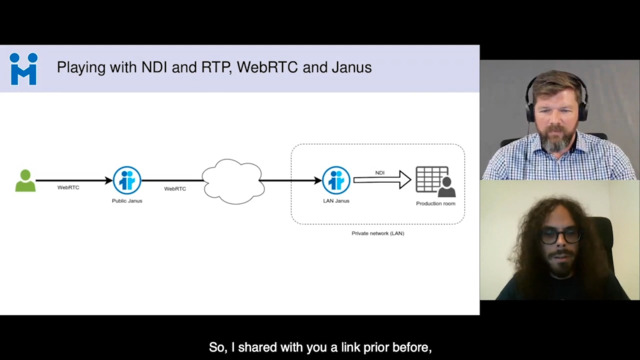

In a nutshell, the NDI plugin was conceived to receive a WebRTC stream (via Janus) and translate it to an NDI feed in real time. This immediately raised a first question: if we want to receive NDI feeds for a conversation taking place between arbitrarily remote participants, what’s the right topology for this? This led us to the following scenario, which actually involves two separate Janus instances:

- a public Janus instance serving the conversation itself between remote participants (e.g., via the VideoRoom SFU plugin);

- a private Janus instance, hosting the NDI plugin and in the same LAN as the NDI consumers, configured to receive the videos from the public instance.

This approach hasn’t changed, and as we’ll see later is actually at the foundation of how broadcaster.vc was conceived. That said, there were things that needed to be addressed, before the NDI plugin as it was written at the time could actually be used.

Improving the plugin!

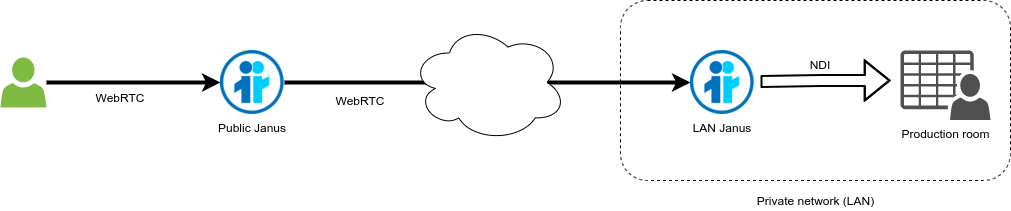

In fact, being mostly a demo or POC, the plugin had some key limitations. An important one was that the audio and video streams were decoded as soon as they were received: this is normally not an issue when everything is running locally, but it can become one the moment the component needs to interact with applications that run over the internet. In fact, packet losses and out-of-order packets obviously confused the decoders, as you can see from the picture below (no, Dan’s house was not on fire!).

This was fixed by basically adding a simple configurable jitter buffer to the plugin, in order to “pace” the incoming traffic before actually decoding it. While this adds a small delay to the processing, this is how all RTC applications dealing with audio and video streams work anyway, and the effects on the NDI feeds were negligible. We added support for RTCP PLI messages as well, for those cases where, despite the buffer, video decoding could still result in errors.
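To give a rough idea of what “pacing” means here, the snippet below is a minimal sketch of a reordering buffer, not the plugin’s actual code. It assumes sequence numbers have already been unwrapped (no 16-bit rollover handling), and it holds back a fixed number of packets rather than a time window; the `JitterBuffer` class and its `depth` knob are hypothetical names made up for the example.

```python
import heapq
from dataclasses import dataclass, field
from typing import List


@dataclass(order=True)
class Packet:
    seq: int                              # assumed already unwrapped
    payload: bytes = field(compare=False)


class JitterBuffer:
    """Hold a few packets back so they can be released in sequence order."""

    def __init__(self, depth: int = 5):
        self.depth = depth                # hypothetical knob: packets held back
        self.heap: List[Packet] = []
        self.last_released = -1

    def push(self, packet: Packet) -> List[Packet]:
        # Drop packets that arrive after their slot was already released,
        # and duplicates of packets still waiting in the buffer.
        if packet.seq <= self.last_released:
            return []
        if any(p.seq == packet.seq for p in self.heap):
            return []
        heapq.heappush(self.heap, packet)
        released = []
        # Once more than `depth` packets are queued, release the oldest ones.
        while len(self.heap) > self.depth:
            oldest = heapq.heappop(self.heap)
            self.last_released = oldest.seq
            released.append(oldest)
        return released

    def flush(self) -> List[Packet]:
        # Release whatever is left, still in sequence order.
        released = []
        while self.heap:
            oldest = heapq.heappop(self.heap)
            self.last_released = oldest.seq
            released.append(oldest)
        return released


def reorder(seqs: List[int], depth: int) -> List[int]:
    """Feed sequence numbers in arrival order, return them in release order."""
    buf = JitterBuffer(depth)
    out = []
    for seq in seqs:
        out += [p.seq for p in buf.push(Packet(seq, b""))]
    out += [p.seq for p in buf.flush()]
    return out
```

With a depth of 2, `reorder([1, 3, 2, 5, 4], depth=2)` hands the packets to the decoder as 1, 2, 3, 4, 5. The price is the small delay mentioned above: nothing is released until the buffer has filled up.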

Another limitation was in codec support. In fact, to keep things simple, the original Janus NDI plugin only supported VP8 for video: this made sense for a simple demo, but less so for a component meant to interact with heterogeneous WebRTC applications in the wild. As such, we added support for VP9 and H.264 as well, which basically meant adding the means to properly depacketize the payloads before feeding them to the decoder. We do have code for H.265 and AV1 as well, but since they’re not widely available yet, we decided to keep them out of the plugin for the moment.
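For those wondering what “depacketizing” involves, here is a minimal sketch for H.264, following the RTP payload format in RFC 6184. It is only an illustration and not the plugin’s code: it assumes the payloads of one frame are passed in order (e.g., after the jitter buffer), it only handles single NAL unit, STAP-A and FU-A packets, and the `depacketize_h264` name is made up for the example.

```python
START_CODE = b"\x00\x00\x00\x01"   # Annex B start code expected by most decoders


def depacketize_h264(payloads):
    """Turn the in-order RTP payloads of one frame into an Annex B byte stream."""
    out = bytearray()
    for payload in payloads:
        if not payload:
            continue
        nal_type = payload[0] & 0x1F
        if 1 <= nal_type <= 23:
            # Single NAL unit packet: the payload is the NAL unit itself.
            out += START_CODE + payload
        elif nal_type == 24:
            # STAP-A: several NAL units, each prefixed by a 16-bit size.
            pos = 1
            while pos + 2 <= len(payload):
                size = int.from_bytes(payload[pos:pos + 2], "big")
                pos += 2
                if size == 0 or pos + size > len(payload):
                    break                      # malformed, stop parsing
                out += START_CODE + payload[pos:pos + size]
                pos += size
        elif nal_type == 28 and len(payload) >= 2:
            # FU-A: one NAL unit fragmented across several packets.
            fu_header = payload[1]
            if fu_header & 0x80:
                # Start bit set: rebuild the original NAL header from the
                # F/NRI bits of the indicator and the type in the FU header.
                nal_header = (payload[0] & 0xE0) | (fu_header & 0x1F)
                out += START_CODE + bytes([nal_header])
            out += payload[2:]
        # Other packet types (STAP-B, MTAP, FU-B) are ignored in this sketch.
    return bytes(out)
```

VP8 and VP9 have their own payload descriptors to strip, but the principle is the same: remove the RTP-specific framing and hand the decoder a bitstream it understands.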

Audio saw some overhaul as well. While we didn’t have to add any other codec at all (to quote Monkey Island, “when there’s only one candidate, there’s only one choice”), we did add support for stereo streams, which we thought would be beneficial from a broadcaster’s perspective: and besides, double bars are cooler in OBS anyway!

Argh, the video keeps changing resolution!

This seems to be one of the things that annoys broadcasters the most, when they deal with NDI feeds. As people familiar with WebRTC probably know already, this is actually quite normal in WebRTC: while you do your best to capture video at a specific resolution, browsers may decide at any time to shrink/expand the video depending on different circumstances, like feedback coming from the network and the RTCP stack, or other aspects like CPU usage on the machine.

This is actually not a bad thing at all, and it’s a clever way of saving resources: nevertheless, it can be problematic for those dealing with the incoming video if they’re not prepared for it, or if they’d rather receive a consistent resolution instead (e.g., for recording purposes). Within the context of NDI, this was partially an issue for us as well: in fact, the original Janus NDI plugin translated the WebRTC stream to the NDI feed using the same exact resolution, meaning that changes in resolution on the WebRTC side would be reflected via NDI as well.

While there are ways in some NDI consumers to “lock” the rendering of incoming feeds to a specific resolution (OBS has a property for that), we thought it would make sense to optionally configure a mechanism in our plugin itself to enforce this consistency: i.e., provide a target width/height for the NDI stream to have, and always scale the incoming video to that, no matter the actual resolution.

This will probably be a very welcome feature, but it should be noted that it won’t solve the issue in ALL cases: in fact, while for video streams coming from webcams you can safely assume a fixed resolution to refer to (it’s easy to check the MediaStream track and use that info in the plugin), the same may very well not be true for screen sharing sessions, where people may be sharing applications and dynamically resizing the windows throughout the session. At the time of writing, the NDI plugin doesn’t take aspect ratio into account, which means that, if scaling is forced via the plugin API, the incoming video may be stretched if the ratio of the target resolution doesn’t match (which may well be the case with application sharing and resized windows). One of the things we plan to do next is to actually involve aspect ratio in that case, in order to “fit” the scaled video in the target frame, and possibly fill the rest with some background or black bars (the jury is still out on that!).
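The “fit” approach we’re considering boils down to some simple arithmetic, sketched below. This is just an illustration of the math and not something the plugin does today; the `fit_in_frame` name is made up for the example.

```python
def fit_in_frame(src_w, src_h, dst_w, dst_h):
    """Scale a source frame to fit inside a target frame, keeping its ratio.

    Returns (scaled_w, scaled_h, offset_x, offset_y): the size of the scaled
    video and where to paint it so that it ends up centered. Whatever is left
    uncovered would be filled with bars or a background.
    """
    # Use the smaller of the two ratios, so that neither dimension overflows.
    scale = min(dst_w / src_w, dst_h / src_h)
    scaled_w = round(src_w * scale)
    scaled_h = round(src_h * scale)
    offset_x = (dst_w - scaled_w) // 2
    offset_y = (dst_h - scaled_h) // 2
    return scaled_w, scaled_h, offset_x, offset_y
```

For instance, a 640x480 webcam feed forced into a 1280x720 NDI stream would be scaled to 960x720 and painted 160 pixels from the left, with bars on both sides, while a square 1000x1000 application window would become 720x720 with a 280-pixel offset. A 1920x1080 source already has the right ratio, so it would fill the target frame completely.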

Logos and stuff

Another feature that came to mind was custom graphics: what if people wanted to watermark videos somehow? It’s usually safe to expect that this kind of branding will most of the time take place in the broadcasting application itself, that is, the application consuming the NDI feeds. Nevertheless, there may be cases where it may be helpful, or needed, to hardcode something on the video itself.

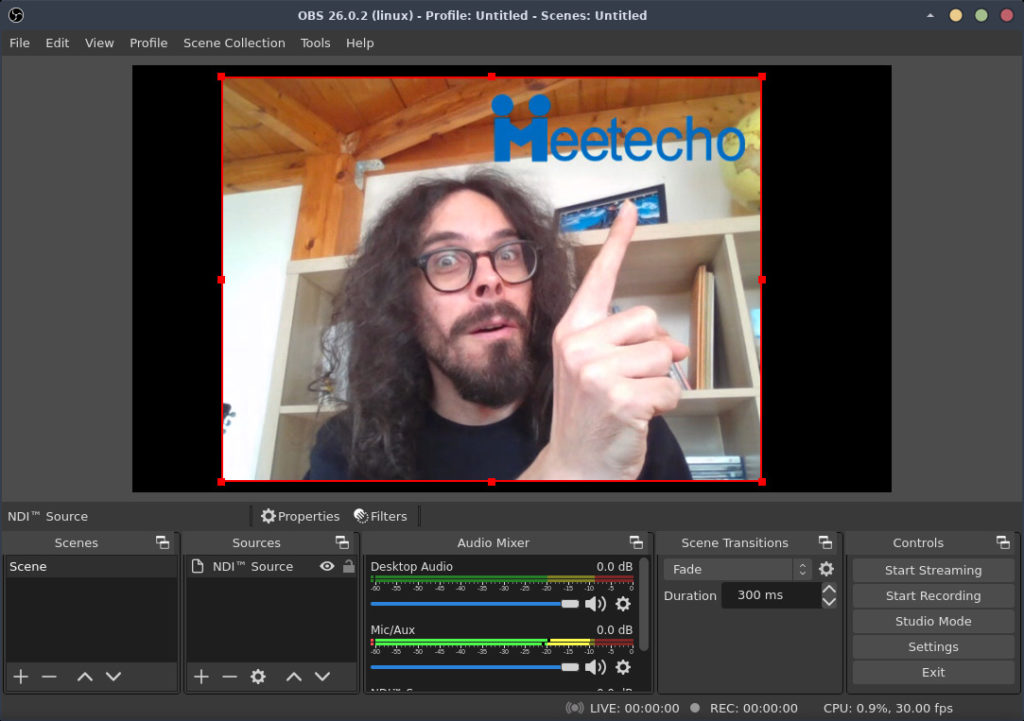

This is how we came up with a simple watermarking feature in the plugin. The way it currently works is pretty basic: you provide a path to an image you want to use (a local file or a web resource), you specify the size it should be scaled to, and finally indicate the coordinates where it should be “painted” on top of the video. In the screenshot below you can see how that works with the Meetecho logo not managing to cover my face enough.

A cool thing is that, once a watermark has been created and added to the video, you can actually dynamically change the coordinates of where it will be painted, e.g., to put it in the top-right corner in some cases, or bottom-left in others.

As you can imagine, though, considering both size and coordinates are absolute, this currently works as expected only if you enforce a static resolution as we’ve seen in the previous section: in fact, with video frames that may change in size depending on the network/CPU of the sender, this may result in badly proportioned or misplaced watermarks whenever the resolution doesn’t match the one the size/coordinates were conceived for.
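To make the problem more concrete, the sketch below shows what happens to an absolute watermark rectangle when the frame shrinks, and how the same rectangle could be rescaled proportionally instead. Proportional placement is not something the plugin does: it’s shown here purely as an illustration, and both helper names are made up for the example.

```python
def fits(rect, frame):
    """Check whether an (x, y, width, height) rectangle lies inside a frame."""
    x, y, w, h = rect
    return x >= 0 and y >= 0 and x + w <= frame[0] and y + h <= frame[1]


def rescale_watermark(rect, designed_for, actual):
    """Rescale a watermark rectangle from one frame size to another."""
    x, y, w, h = rect
    sx = actual[0] / designed_for[0]
    sy = actual[1] / designed_for[1]
    return (round(x * sx), round(y * sy), round(w * sx), round(h * sy))


# A logo meant for the top-right corner of a 1280x720 video...
logo = (1100, 20, 160, 90)
# ...no longer fits if the sender drops to 640x360...
misplaced = not fits(logo, (640, 360))
# ...unless position and size follow the resolution.
adjusted = rescale_watermark(logo, (1280, 720), (640, 360))
```

In this example the logo at x=1100 ends up entirely outside of a 640-pixel wide frame, which is why enforcing a static resolution is currently the safest way to use watermarks.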

That said, while there’s of course room for improvement, we think this will be a useful feature as it is already anyway. We plan to take advantage of it ourselves, for instance, to provide free-to-use demo versions of the plugin where a logo will always be hardcoded on all NDI videos.

How to test if NDI actually works?

This was another interesting aspect to consider. Without delving too much into details, NDI basically uses mDNS for discovery within the LAN: this means that streams will only be visible and consumable if the parties involved can actually see these messages flowing around. As such, we needed a way to quickly test whether or not a private Janus instance shipping our NDI plugin could actually be seen by other NDI applications in the same network, without the need to actually create a WebRTC-to-NDI session for the purpose.

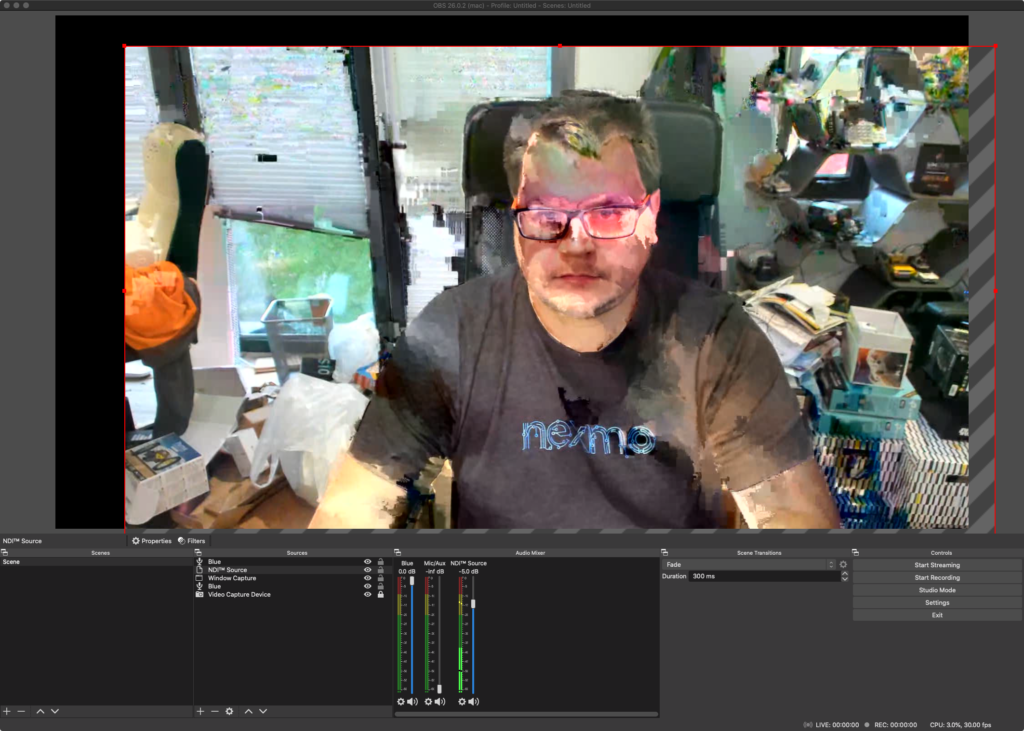

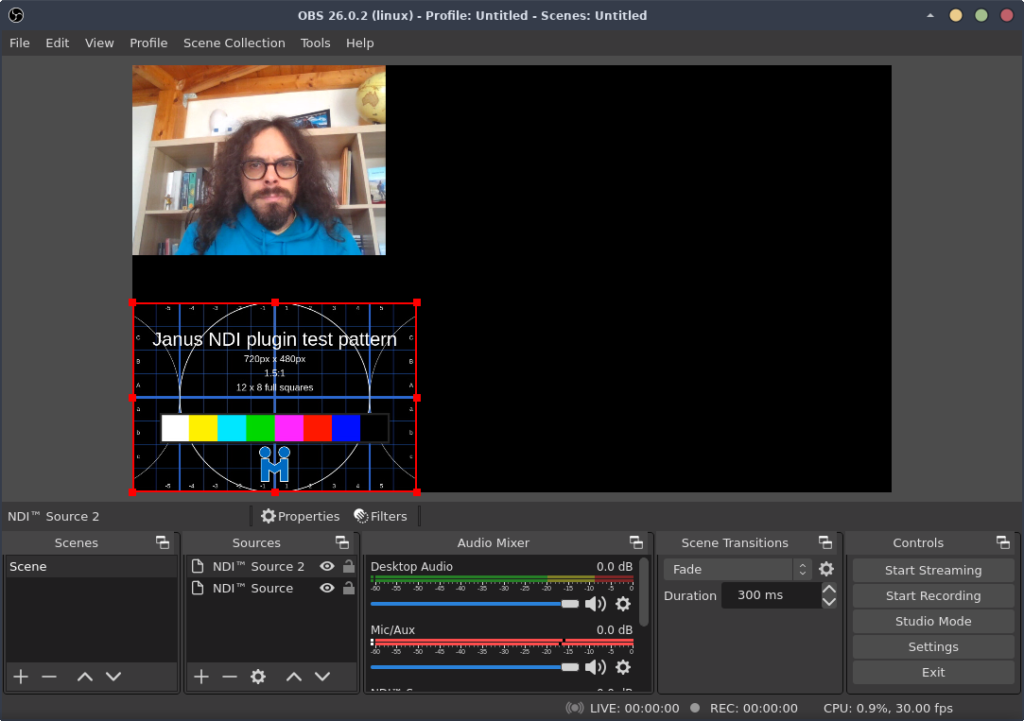

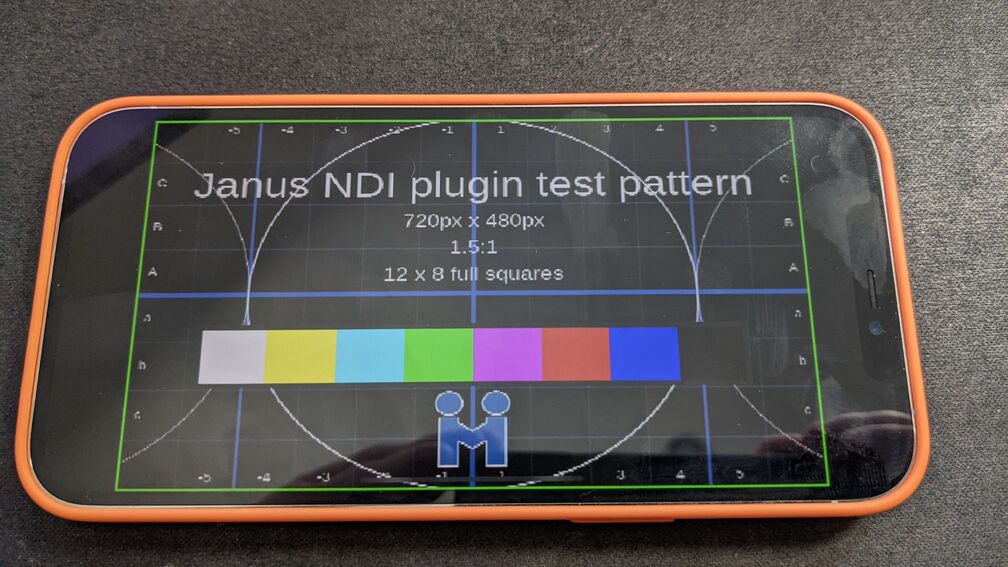

We solved this with a simple approach, that is a triggerable feature that would send a static test pattern via NDI: basically, we added an API method to the plugin that, when invoked, would create an ad-hoc NDI sender and feed it with a static image on a regular basis, to simulate a 30fps video stream. This made it very easy to test whether or not the setup was functioning: just start the test pattern, and see if you can see it in your NDI application! If you can, then the WebRTC-to-NDI sessions will work too. The picture below shows OBS displaying NDI feeds coming both from the static test pattern and a WebRTC feed (my ugly face).
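The “regular basis” part is just a pacing loop, which can be sketched as follows. This is a simplified illustration and not the plugin’s code: `send_frame` is a hypothetical callback standing in for whatever actually hands the image to the NDI sender, and the clock and sleep functions are parameters only to make the loop easy to test.

```python
import time


def frame_deadlines(start, fps, count):
    """Compute when each frame is due, relative to a start time in seconds."""
    # Multiplying from the start time avoids the drift you would get by
    # repeatedly adding 1/fps to the previous deadline.
    return [start + n / fps for n in range(count)]


def run_test_pattern(send_frame, frame, fps=30, count=90,
                     clock=time.monotonic, sleep=time.sleep):
    """Send the same static frame `count` times, paced at `fps`."""
    start = clock()
    for deadline in frame_deadlines(start, fps, count):
        delay = deadline - clock()
        if delay > 0:
            sleep(delay)          # wait until the frame is actually due
        send_frame(frame)         # hypothetical: pass the image to the sender
```

With the defaults, `run_test_pattern(print, "frame")` would print the same string 90 times over roughly three seconds, which is how a static image ends up looking like a 30fps stream to NDI consumers.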

And doesn’t it look great on Dan’s phone too?

Yeah, yeah, that’s enough, where do I get it?

While the plugin isn’t currently available anywhere, it soon will be! As anticipated in the intro, we partnered with Dan to create a service that’s supposed to make it very easy to host online conversations via WebRTC, and have them pulled automatically in your network to be consumed via NDI.

If you’re wondering how broadcaster.vc will work, here it is in a nutshell:

- a Janus-based conferencing infrastructure will be provided as a service by broadcaster.vc itself;

- you create an account and create the rooms you want to use to have online conversations with remote participants;

- you download a customized docker image that contains a Janus instance with the NDI plugin, and run it in your network;

- the moment the conversation happens online, the docker instance in your network will automagically subscribe to the active streams, and turn them into NDI feeds;

- you do your magic with your favourite NDI application!

That’s really it. It’s conceived to be as simple as explained above! Of course there will be some nits to fix before this is actually available, but that’s the plan: if you’re interested in seeing how it works (and I know for a fact many are already!), you may want to get in touch with Dan for a demo.

In case you’re not interested in subscribing to the service, and would like to use/orchestrate the Janus NDI plugin on your own with your own service instead, we’re planning on making it available separately as well, via a purchasable license. We don’t have any details on this part yet (just as there currently isn’t any pricing information for broadcaster.vc either), but there soon will be, so stay tuned! As anticipated, we’ll probably make available a limited version that can be used for testing purposes, so that you can decide for yourself whether it does address your requirements or not.

That’s all folks!

Hope you enjoyed this update: working on all this has been quite fun so far, and so we’re definitely excited to bring it to the world!