It’s been a while since we last spoke of WHEP. To refresh your memory, it was a few months ago, when we first introduced WHEP as a companion protocol to WHIP, which we had already talked about a few other times.

At the time, we explained how WHIP and WHEP both play quite an important role in the future of WebRTC-based broadcasting. WHIP (WebRTC-HTTP Ingestion Protocol) is a protocol that was conceived to facilitate the process of media ingestion via WebRTC, something that in “traditional” broadcasting is usually done by means of protocols like RTMP. WHEP (WebRTC-HTTP Egress Protocol), on the other hand, takes care of the distribution process, that is how to ensure viewers and subscribers can consume a stream via WebRTC instead. From this very brief introduction it’s clear that, although it’s not mandatory for both of them to be used at the same time (WHIP could feed streams that are only consumed via HLS, or WHEP could be used to consume streams fed using other means), combining WHIP and WHEP is indeed the easiest way of getting truly ultra-low latency broadcasting of your streams, since WebRTC would be used on both ends. That can be huge in contexts where the lowest latency possible is important, like the broadcasting of live sport events, betting, gaming, etc.

That said, while work on WHIP is basically done, and an RFC may be ready soon, work on WHEP has just started: it’s not even a working group document yet. In its short life, there have already been some major changes in its design (some of which, spoiler alert, I’m totally unhappy about), and it’s likely that in the next few iterations it will change even more. As such, I thought I’d spend a few words on where we started and where we’re at, with a few considerations on the prototype work I’m doing on the protocol.

How does WHEP work?

Just like WHIP, WHEP was conceived to be as simple as possible: no complex protocol mechanics, just an exchange of HTTP messages to get the WebRTC negotiation process rolling and, once a PeerConnection is ready, you’re basically done. This was a choice made on purpose, in order to make it as easy as possible to switch from existing (and often well-established) technologies to WebRTC instead. It was indeed one of the key selling points of WHIP, which had to somehow present itself as a credible alternative to RTMP, which has been historically very easy to configure in streaming applications: luckily, this seems to have started convincing more and more people, and there are even ongoing efforts to add WHIP support to very widespread applications like OBS.

As we’ve explained in previous blog posts, WHIP uses a single HTTP dialog to set up a WebRTC ingestion session: you send your SDP offer in an HTTP POST, and the WHIP server provides its SDP answer in the HTTP response. If you don’t take into account ICE trickling, which may involve a few additional HTTP requests, that’s all you need to set up a session with WHIP: once the HTTP dance is done, it’s up to the WebRTC stack to do its job, and that’s something we’ve done a gazillion times.
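As a rough sketch of that single HTTP exchange, the snippet below builds the POST that carries the offer and reads the response that carries the answer. The helper names (`buildOfferRequest`, `readAnswer`), the optional bearer token and the plain-object response shape are assumptions made for illustration; only the `application/sdp` content type and the `201 Created` plus `Location` pattern come from the protocol.

```javascript
// Build the fetch() options for a WHIP/WHEP-style POST carrying an SDP offer.
// `token` is optional and hypothetical: pass it only if your endpoint expects
// a bearer token.
function buildOfferRequest(sdpOffer, token) {
  const headers = { 'Content-Type': 'application/sdp' };
  if (token) headers['Authorization'] = 'Bearer ' + token;
  return { method: 'POST', headers, body: sdpOffer };
}

// Extract the SDP answer and the session resource from a response.
// `response` is a plain object ({ status, location, body }) rather than a
// real fetch Response, to keep the sketch independent of the environment.
function readAnswer(response) {
  if (response.status !== 201) {
    throw new Error('Unexpected status: ' + response.status);
  }
  return { resource: response.location, sdpAnswer: response.body };
}
```

In a browser, the first result would be passed to `fetch()` together with the endpoint address, and the returned answer would go to `setRemoteDescription()` on the PeerConnection.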

WHEP started with the same assumptions, but initially with a twist. Specifically, while in WHIP we could always assume the client would take the initiative and generate an SDP offer, in WHEP you had two different modes, exemplified in the diagrams below.

In a nutshell, WHEP assumed that a client could do either of two things:

- just as in WHIP, originate an SDP offer (stating their intention of receiving specific media), and wait for an answer from the WHEP server, or

- ask the WHEP server to provide an SDP offer instead (with a description of the media session), and then provide the SDP answer in a further exchange.

While this made sense, it also caused some trouble, since it meant WHEP servers (and clients) could operate in two completely separate modes, basically non-interoperable with each other. Without specific guidelines or requirements, this could easily get to a point where some WHEP servers would only implement one, and some WHEP clients would only implement the other, thus making them unable to interoperate, despite implementing the same specification.

To address this, the latest WHEP specification got rid of one of them, leaving a single viable mode. The problem being, IMHO they got rid of the wrong one, which got me quite pissed…

Why u angry, Lorenzo? Grumpy old man syndrome?

Well, I wish I could say I’m getting grumpier with age (and I am getting older but, as I always say, unlike whisky I’m unfortunately not getting any better in the process), but the truth is I’ve been grumpy since I was much younger, so that’s not it.

As we were saying, WHEP got a new draft, and one of the modes we introduced was axed. Specifically, the second one in the list, that is the one where the WHEP server would originate the offer and the WHEP client would provide the answer. This means that WHEP now works exactly as WHIP: in both protocols, it’s the client’s responsibility to provide an offer, and the server’s to answer. Sergio, the author of the specification, justified this as necessary to allow servers to not bind to any specific codec, in case the endpoint was not fed by any actual media yet. While I can understand the motivation, I’ve said multiple times already in different places that I was totally unhappy with this decision, for many different reasons.

An important one, of course, was quite simply selfish: in Janus, we use the Streaming plugin to provide WHEP functionality via our Simple WHEP Server, and the Streaming plugin always sends offers itself. This is a design choice we made since the very beginning: when there’s media to send to a subscriber, it’s the server that knows what the session will look like, and so it just makes sense that it’s the server that will provide an offer with the available media, that the client can then either accept or reject. Needless to say, a WHEP version that completely reverses that process made very little sense to me: why should the client prepare an offer, when they may not even know what’s on the table? Why allocate resources that could get wasted? At the end of the day, this basically meant that Janus, as it is, cannot be used without considerable changes (more on that later), and that was enough to get me pissed off already, especially considering I’ve been one of the strongest proponents of WebRTC based broadcasting for a very long time (my Ph.D. thesis on SOLEIL many years ago was exactly on that before it was even a thing).

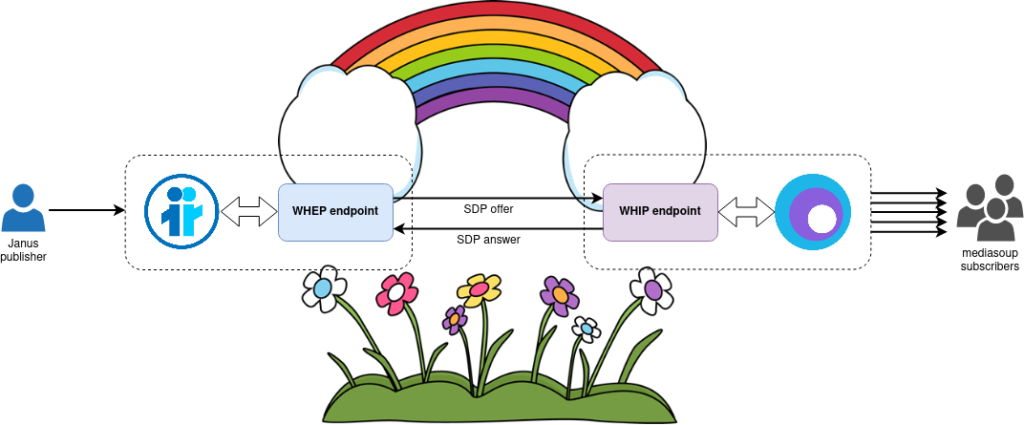

That said, my reasons to be unhappy about the change were not solely selfish. Besides the “it doesn’t make sense to me” part, there’s also the fact that I think we’re wasting a great opportunity to have basic WebRTC federation. In a world where in WHIP the client sends the offer, and in WHEP the client expects the offer instead, it means that you can indeed chain WHIP and WHEP any way you want: it means, for instance, that you can take a WHEP endpoint, and use it as a source of media for a WHIP endpoint. To make a simple practical example, if the WHEP endpoint is backed by Janus, and the WHIP endpoint is backed by mediasoup, we’ve basically managed to take a stream published in Janus and ingest it in mediasoup using a standard protocol, independently of whatever protocol the two media servers are using. It was a powerful feature, and one that could have been groundbreaking, especially considering how important federation has been recently (Mastodon anyone?) and how Matrix has been trying to do exactly that for a while for WebRTC itself.

With WHIP and WHEP now both assuming it’s always the client that offers, though, that becomes impossible. In fact, looking at the diagram above it becomes immediately obvious that, in that case, neither server would ever take the initiative (they’d both be expecting an SDP offer from outside), meaning no negotiation could ever happen among them, as shown in the picture below.
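The reasoning boils down to a simple rule: two endpoints can be wired together only if exactly one of them produces the offer. The snippet below is just a toy model of that rule, with role names that are made up for the purpose.

```javascript
// Each side of a signalling exchange either sends the SDP offer or waits for one.
const OFFERS = 'offers';
const EXPECTS_OFFER = 'expects-offer';

// Roles of the *servers*, as described in the text (names are hypothetical).
const servers = {
  whip: EXPECTS_OFFER,             // WHIP: the client always offers
  whepServerOffers: OFFERS,        // old WHEP mode: the server offers
  whepClientOffers: EXPECTS_OFFER  // current WHEP draft: the client offers
};

// Two endpoints can negotiate with each other only if exactly one offers.
function canNegotiate(a, b) {
  return (a === OFFERS) !== (b === OFFERS);
}
```

With these definitions, `canNegotiate(servers.whepServerOffers, servers.whip)` is `true`, since a relay only has to shuttle the SDP from one server to the other, while `canNegotiate(servers.whepClientOffers, servers.whip)` is `false`, because both servers would sit there waiting for an offer.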

That said, it’s clear that, while federation was indeed a really cool “side effect” of the specification, it was never an actual or desired feature of the protocols: as such, the fact it’s now no longer possible shouldn’t be seen as a failure. Still, I consider it, at the very least, a wasted opportunity.

But, as anticipated before, one other reason for hating this change was mostly selfish, so let’s address that now.

So we have to change Janus, huh?

We explained initially that our WHEP server prototype uses the Streaming plugin in Janus to implement its WebRTC broadcasting functionality. In fact, the Streaming plugin is indeed optimized for exactly that task, and is easy to feed with media to restream from different sources, including (but not limited to) other Janus instances, as we explained in a previous blog post.

That said, we also hinted at the fact that the Streaming plugin, in its current form, always takes the initiative when it comes to WebRTC negotiation. In fact, while it’s users that originate a request to subscribe to a specific stream (as it should be), that always results in the Streaming plugin preparing an SDP offer for them to evaluate and provide an answer to. The reason for this is quite simple: since the Streaming plugin knows what the stream contains (e.g., an audio only stream, or an audio/video stream where audio is Opus and video is VP8, or a collection of three audio streams and 4 video streams), then it makes sense for the plugin to describe, in an SDP, what media is available to the subscriber, including info on which codecs are in use, which profiles, etc. The user can then decide what it wants to (or can) receive, and prepares an SDP answer accordingly, and that eventually results in a WebRTC PeerConnection being created for the actual media delivery.

This pattern has been in place since day one of Janus, and is actually used in other plugins too, whenever there’s a monodirectional delivery of media from Janus to users. The VideoRoom plugin uses the same approach for subscriptions, for instance, and so does the Record & Play plugin whenever you want to replay a previously recorded message. We never had any reason to change this approach since, again, it just makes sense. Even just thinking of the Streaming plugin, for instance, it allowed us to very easily add RTSP gatewaying functionality to the plugin, since RTSP (another well known and widespread streaming technology the IETF came up with) basically always did the same thing too.

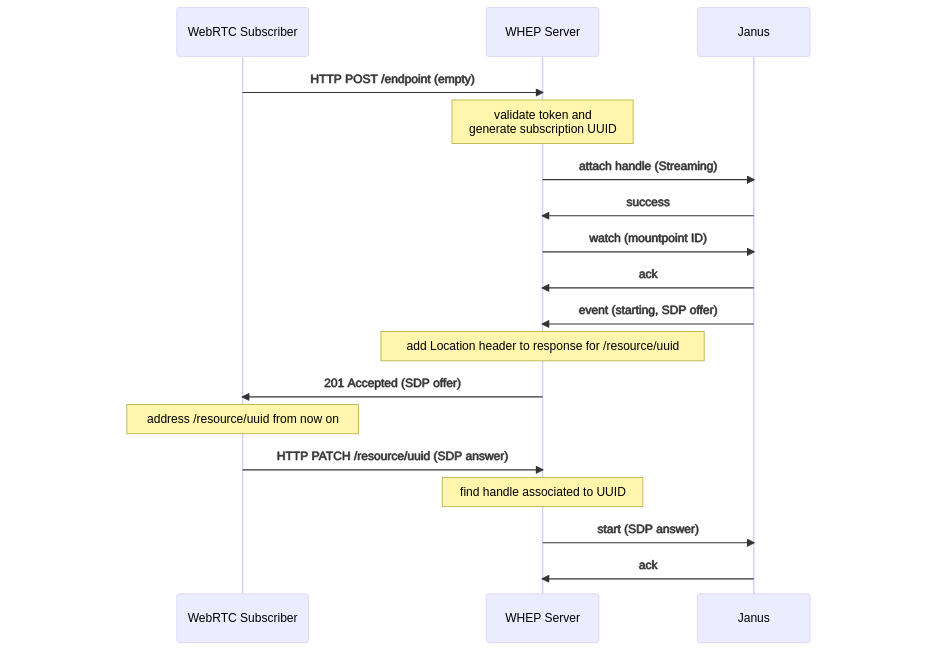

This is what made it quite easy for us to use the old WHEP “mode 2” to add WHEP support to Janus, which, as explained in the previous blog post, looked a bit like this.

With the previous specification, it was easy to use an empty HTTP POST from a WHEP subscriber as a trigger to have the Streaming plugin originate an SDP offer to send back, and then use the resulting SDP answer from the subscriber to close the circle and take advantage of the Streaming plugin’s broadcasting capabilities.

As we’ve repeatedly said in this blog post, though, that’s now no longer possible. WHEP subscribers will now always generate an SDP offer themselves, and trying to send that offer to Janus as part of an exchange with the Streaming plugin will simply not work. The plugin will ignore it, and the session will go nowhere, meaning no WebRTC PeerConnection will ever be established. This means that, if we want Janus to be a WHEP server option just as for WHIP, then we need to make some changes, which means:

- either write a completely new plugin that accepts SDP offers but broadcasts media (yeah, right), or

- we modify the Streaming plugin to allow incoming offers too (ew).

Needless to say, I went for the second option, which is currently available in a dedicated pull request.

The effort wasn’t trivial, and further uncovered and highlighted why I didn’t like the approach. The main thing I didn’t like was the complete disconnect between what subscribers think they want and what’s actually on the table. As explained before, the main reason why the Streaming plugin normally sends the offer is that the plugin knows what’s available: if the mountpoint has one audio stream and two video streams, that’s what you’ll get in the offer. The moment it’s the subscriber that’s sending the offer, how do you reflect that? While in Janus there are ways to query a mountpoint about its capabilities, meaning you can then prepare an offer to receive what you want accordingly, there’s no such thing in WHEP. You don’t know what a WHEP endpoint will provide in advance, so you have to prepare an offer completely blind, and hope what you ask for matches what’s available somehow.

This translated to a few ambiguities when I had to write the code too. How to handle, for instance, an incoming offer that includes an audio and a video stream, when the mountpoint the offer is for includes, let’s say, two video streams? Do you automatically try and match the first video stream with the m-line in the offer? Why the first and not the second? How do we know what the subscriber is interested in, when multiple options are available? The way I handled it in the code is indeed an order-based match, trying to take codecs into account: if there are two video streams in the mountpoint and only one in the offer, we try to match the first one; if there’s no codec match on that, we try the second; and so on and so forth. Everything else is dropped, which will sometimes inevitably result in “crippled” sessions: ideally, WHEP sessions will mostly be streamlined enough that it’s never an issue (e.g., one audio and one video stream), but that’s something that may change in the future (e.g., multiple audio channels for different languages, datachannels used for additional features, etc.).
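To make the order-based match more concrete, here’s a minimal sketch of the idea in JavaScript. It’s not the code in the Streaming plugin (which is written in C), and the data shapes are simplified assumptions: each offered m-line is a media type plus a list of codecs, and each mountpoint stream is a media type plus a single codec.

```javascript
// Match offered m-lines against a mountpoint's streams, in order.
// offer:      [{ type: 'audio' | 'video', codecs: ['opus', ...] }, ...]
// mountpoint: [{ type: 'audio' | 'video', codec: 'vp8' }, ...]
// Returns one entry per offered m-line: the index of the matched mountpoint
// stream, or null if the m-line has to be rejected.
function matchOffer(offer, mountpoint) {
  const used = new Set();
  return offer.map(mline => {
    // First stream of the same type, not taken yet, whose codec was offered
    const index = mountpoint.findIndex((stream, i) =>
      !used.has(i) &&
      stream.type === mline.type &&
      mline.codecs.includes(stream.codec));
    if (index === -1) return null;
    used.add(index);
    return index;
  });
}
```

For a mountpoint with a VP8 and an H.264 video stream, an offer with one audio m-line and one H.264-only video m-line would get the audio rejected and the video matched to the second stream; mountpoint streams that nobody matched are simply left out of the session.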

Anyway, with the Janus part taken care of, the next step was updating the WHEP prototype accordingly.

The (not so) Simple WHEP Server

In the previous blog post, we introduced Simple WHEP Server, a simple API translation shim that sits between Janus and WHEP subscribers: this component simply talks WHEP on one side, and the Janus API on the other, thus taking care of translating the signalling layer so that WHEP subscribers and Janus can eventually create a WebRTC PeerConnection between each other. It’s the same exact approach we followed for WHIP as well.

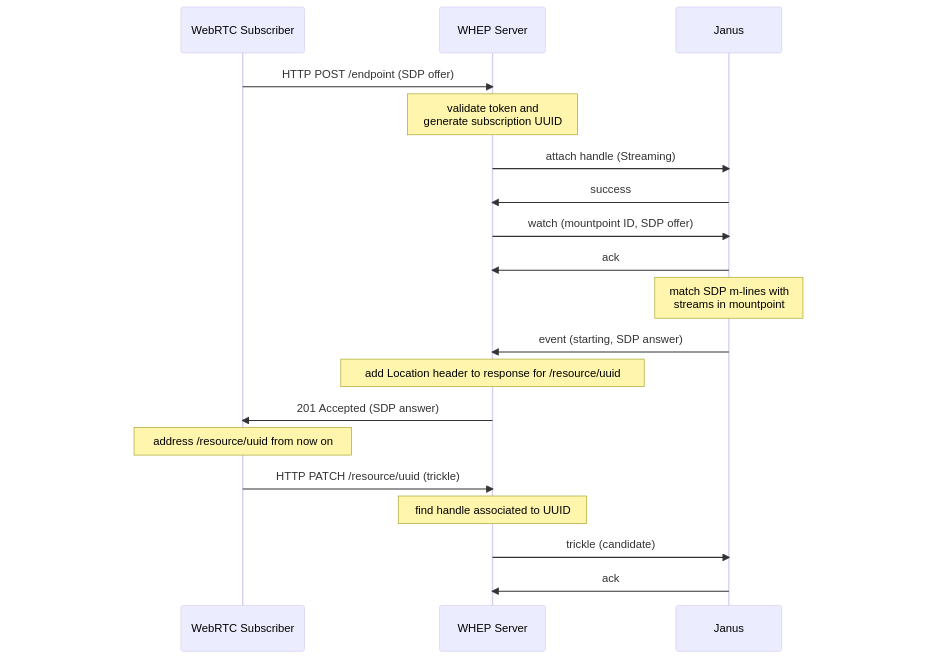

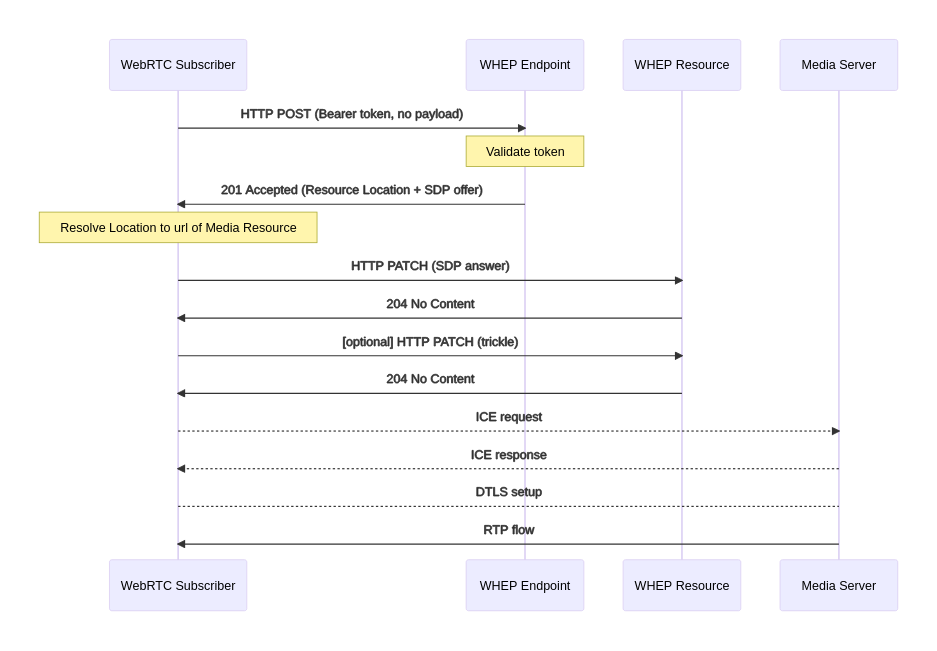

We explained initially how this involved using WHEP’s “mode 2” with the Streaming plugin, but that obviously had to change with mode 2 now not being available anymore. With the Streaming plugin now also able to receive SDP offers (using the above-mentioned PR), this meant changing the shim code to allow incoming SDP offers too, and using the updated Streaming plugin API accordingly. This new approach is sketched in the sequence diagram below.

These changes were implemented in yet another pull request, specifically this one. At the moment, this PR doesn’t get rid of ye old “mode 2” we used before, but adds the subscriber-offers mode as a new thing you can take advantage of: the main reason for this is that the functionality has not been merged in Janus as of yet, which means it wouldn’t work with currently available versions of Janus until it is.
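Supporting both modes side by side essentially means looking at what the subscriber’s POST contains. The function below is a hypothetical sketch of that decision, not the actual code in the pull request: an empty POST triggers the old behaviour (the server offers), while a POST carrying an SDP is treated as a subscriber offer.

```javascript
// Decide which negotiation mode a WHEP POST is asking for.
// `contentType` and `body` are the Content-Type header and the raw body of
// the request; both names are assumptions made for this sketch.
function negotiationMode(contentType, body) {
  const hasBody = typeof body === 'string' && body.trim().length > 0;
  if (!hasBody) return 'server-offers';
  // Ignore any parameters after the media type (e.g. "; charset=utf-8")
  const type = (contentType || '').split(';')[0].trim().toLowerCase();
  return type === 'application/sdp' ? 'client-offers' : 'invalid';
}
```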

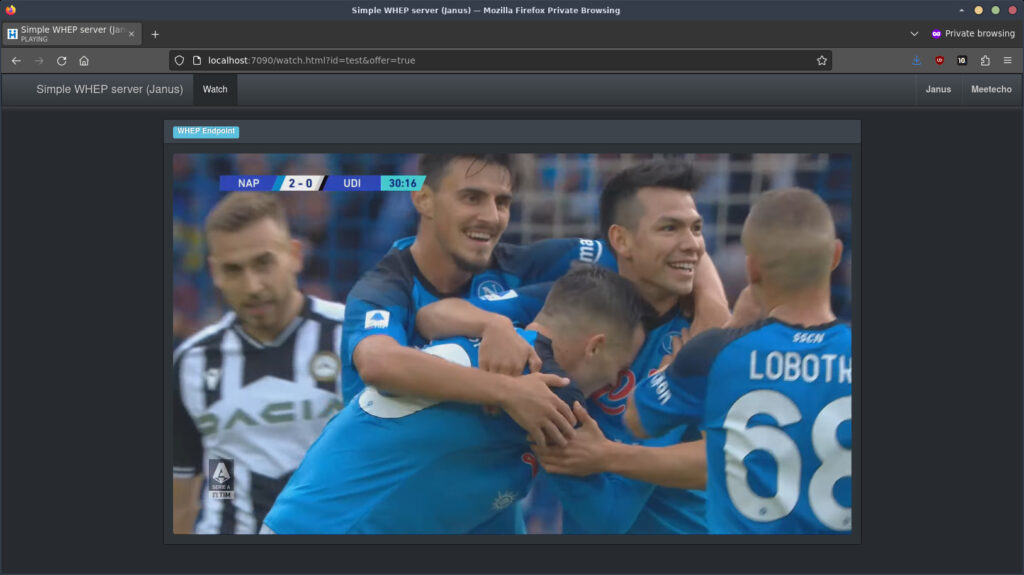

Testing this is relatively straightforward, since it only requires adding a query string argument to the watch.html demo page, which is a WHEP player testbed for endpoints created in the server. Specifically, if you add an offer=true query string argument to the page when subscribing to a WHEP endpoint, the web page will be configured to generate a recvonly SDP offer (defaulting to both audio and video), and send that to the WHEP server; in turn, the WHEP server will use that offer in its exchange with the Streaming plugin via Janus, and relay back via the WHEP response the SDP answer it will get from Janus. As you can see from the screenshot below (mandatory reference to the exceptional season of SSC Napoli included), this works exactly as expected.
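For reference, one common way of getting a recvonly offer out of a browser is adding recvonly transceivers before calling `createOffer()`. The helper below is a minimal sketch of that step: the function name is made up, and it’s not necessarily how watch.html does it.

```javascript
// Add recvonly transceivers to a PeerConnection (or any object exposing the
// same addTransceiver() method) so that the next createOffer() only asks to
// receive media. Returns the list of media kinds that were added.
function addRecvonlyTransceivers(pc, { audio = true, video = true } = {}) {
  const kinds = [];
  if (audio) kinds.push('audio');
  if (video) kinds.push('video');
  for (const kind of kinds) {
    pc.addTransceiver(kind, { direction: 'recvonly' });
  }
  return kinds;
}
```

In a browser you’d call this on a new `RTCPeerConnection`, then use `createOffer()` and `setLocalDescription()` as usual, and send the resulting SDP in the WHEP POST.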

Notice that, while I’ve updated the integrated WHEP player demo in the WHEP server repository to test this new mode, I haven’t done the same with our GStreamer-based Simple WHEP Client as of yet. The main reason for this is that updating the client too would require way more effort than I’m willing to devote to it now. Besides, GStreamer developers implemented native support for both WHIP and WHEP already, and while this support is not widely available yet (they’re part of the gstreamer-rs plugins, which not all distros ship at the moment), that may change in the near future, making our prototype client less useful for testing purposes. If you’re willing to contribute a patch to make that possible, though, we’d definitely be happy to review the changes.

What about Server-Sent Events (SSE)?

Oh, yes, almost forgot about that. One more big change that occurred in the latest WHEP draft is the newly added support for Server-Sent Events (or SSE for short) to provide live events to WHEP subscribers about the stream they’re receiving. This can include information about the number of people currently subscribed to the feed, whether the stream is live or not, and also details about the simulcast/SVC nature of the video, if any. We already started implementing this functionality in our Simple WHEP Server, so if you’re interested in giving it a try do check out the related pull request: it’s based on the one that adds support for client offers, rather than the main branch, so by testing that you’ll actually kill two birds with one stone (ugly metaphor, I know; please don’t kill anyone!).

Different alternatives were discussed to provide this event functionality, including long polling, WebSocket, or QUIC. Eventually, SSE were proposed for the sake of simplicity, as they provide a very simple, and standard, mechanism for providing events that a server generates (hence the name). Despite SSE being based on HTTP, they don’t work using long polls, which makes their overhead quite minimal: in fact, they rely on text streams instead, where the client simply sends an HTTP request asking for events, and then multiple events can be sent over time in response to that request, only closing the dialog when you’re done. In a nutshell, from a sequence diagram perspective it looks a bit like this.

On the client side, things are quite simple, since all you have to do is create an instance of EventSource with the address you want to contact for events, and then register a listener for the events you’re interested in, e.g.

    var source = new EventSource('updates.cgi');
    source.onmessage = function (event) {
        alert(event.data);
    };

for generic events, or

    var source = new EventSource('updates.cgi');
    source.addEventListener('add', addHandler, false);
    source.addEventListener('remove', removeHandler, false);

for intercepting different event types.

In the current version of WHEP, server events are an optional feature that can be advertised within the context of the HTTP dialog used to exchange SDPs. Specifically, just as with ICE servers, a Link header is provided in the response in that case, with info on what’s available. An example is provided in the snippet below:

    POST /whep/endpoint/test HTTP/1.1
    [..]

    HTTP/1.1 201 Created
    [..]
    Link: </whep/sse/QUsW6uOyJrV3mb6K>; rel="urn:ietf:params:whep:ext:core:server-sent-events"; events="active,inactive,layers,viewercount"
    [..]

This example, which comes from the implementation in Simple WHEP Server, shows how the WHEP server responds to the subscription with details on how to subscribe to events: specifically, as anticipated, a Link header is added with a rel value of urn:ietf:params:whep:ext:core:server-sent-events, which tells the subscriber they can contact the provided address for events; besides, the server is also providing info on which events are supported specifically, which in this case are active, inactive, layers and viewercount.
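On the subscriber side, getting that info out of the header is a matter of a little string parsing. The function below is a minimal sketch that assumes the header value contains a single link, formatted as in the example above; `parseSseLink` is a made-up name, and a real client would need a more complete Link header parser (e.g., to deal with multiple links in the same header, like the ones for ICE servers).

```javascript
const SSE_REL = 'urn:ietf:params:whep:ext:core:server-sent-events';

// Parse a single Link header value and, if it advertises the SSE extension,
// return the address to contact and the list of supported events.
function parseSseLink(linkValue) {
  const target = /^\s*<([^>]*)>/.exec(linkValue);
  if (!target) return null;
  // Collect the quoted parameters that follow the target (rel, events, ...)
  const params = {};
  const paramRe = /;\s*([\w-]+)\s*=\s*"([^"]*)"/g;
  let match;
  while ((match = paramRe.exec(linkValue)) !== null) {
    params[match[1].toLowerCase()] = match[2];
  }
  if (params.rel !== SSE_REL) return null;
  const events = (params.events || '')
    .split(',')
    .map(name => name.trim())
    .filter(Boolean);
  return { url: target[1], events };
}
```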

What’s important to point out is that the address provided in the Link header is not the one you pass to EventSource: as per the WHEP specification, you first need to send an HTTP POST to the provided address with the list of events you want to receive (typically a subset of what was provided in the WHEP response), and the response to that POST will include a Location header with the actual address of the event source. This means that, at this point, an interested WHEP subscriber can create an EventSource as shown above, and register listeners for the events they’re interested in. The list of steps that were just described is summarized in the sequence diagram below (from which, for the sake of simplicity, all internal steps related to WHEP itself are omitted).

Notice that in the prototype implementation we wrote for the Simple WHEP Server, the address you issue the POST to and the event source itself are actually the same thing, meaning that the same address is used to initialize the source and then consume it. This is because the address actually references the subscriber, rather than the WHEP endpoint, which may be needed in the future in case subscriber-specific info can be returned too, rather than generic events that are common to all subscribers.

Once SSE is in place, from a protocol perspective as anticipated the events are provided as a continuous stream of text over a persistent connection originated by an HTTP GET. This means that, if we issue a GET via an EventSource to the address provided in the response we’ve seen before:

    GET /whep/sse/QUsW6uOyJrV3mb6K HTTP/1.1
    Host: localhost:7090
    User-Agent: Mozilla/5.0 (X11; Linux x86_64; rv:109.0) Gecko/20100101 Firefox/112.0
    Accept: text/event-stream
    Accept-Language: en-GB,en;q=0.5
    Accept-Encoding: gzip, deflate, br
    DNT: 1
    Connection: keep-alive
    Referer: http://localhost:7090/watch.html?id=test&offer=true
    Sec-Fetch-Dest: empty
    Sec-Fetch-Mode: cors
    Sec-Fetch-Site: same-origin
    Pragma: no-cache
    Cache-Control: no-cache

the response we’ll get will look like this:

    HTTP/1.1 200 OK
    X-Powered-By: Express
    Access-Control-Allow-Origin: *
    Cache-Control: no-cache
    Content-Type: text/event-stream
    Connection: keep-alive
    Date: Mon, 17 Apr 2023 13:50:45 GMT
    Transfer-Encoding: chunked

    d
    retry: 2000
    13
    event: viewercount
    19
    data: {"viewercount":1}
    e
    event: active
    a
    data: {}
    13
    event: viewercount
    19
    data: {"viewercount":2}
    13
    event: viewercount
    19
    data: {"viewercount":1}
    10
    event: inactive
    a
    data: {}

As you can see, the content type is text/event-stream with a chunked transfer encoding, which means the response will be updated over time as new events become available. In this specific example, we see a few viewercount events (telling us of subscribers coming and going), an active event (telling us that the WHEP endpoint is now fed with live WebRTC data) and an inactive event (telling us that no media is flowing through the endpoint now). The data you see comes from the prototype implementation we added to the WHEP server, and as such is not really representative of what you should actually get (there’s still some ambiguity on what some events should look like, since the specification is lacking in that department), but is nevertheless a good example of what SSE looks like in the wild.
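If you’re wondering what producing that stream looks like on the server side, each event is just a couple of lines of text followed by an empty line. The helper below is a minimal sketch of the serialization (the function name is made up, and it’s not the code in Simple WHEP Server): the lengths of the strings it generates do match the hexadecimal chunk sizes in the capture above, where the event and data lines were written as separate chunks.

```javascript
// Serialize one event in the text/event-stream format: an "event:" line with
// the event name, a "data:" line with a JSON payload, and an empty line that
// tells the client the event is complete.
function formatSseEvent(name, payload) {
  return 'event: ' + name + '\n' +
         'data: ' + JSON.stringify(payload) + '\n\n';
}
```

In an Express handler, after setting the Content-Type to text/event-stream, the returned string could simply be passed to `res.write()` every time there’s something new to report.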

From a client perspective, as anticipated things are much easier, as you don’t really have to do any manual processing of the stream of events we’ve seen above. Let’s check, for instance, what our demo does with respect to SSE:

    function startSSE(url, events) {
        console.warn('Starting SSE:', url);
        $.ajax({
            url: url,
            type: 'POST',
            contentType: 'application/json',
            data: JSON.stringify(events)
        }).error(function(xhr, textStatus, errorThrown) {
            bootbox.alert(xhr.status + ": " + xhr.responseText);
        }).success(function(res, textStatus, request) {
            // Done, access the Location header
            let sse = request.getResponseHeader('Location');
            console.warn('SSE Location:', sse);
            let source = new EventSource(sse);
            // Event names must match the ones advertised in the Link header
            source.addEventListener('active', message => {
                console.warn('Got', message);
            });
            source.addEventListener('inactive', message => {
                console.warn('Got', message);
            });
            source.addEventListener('viewercount', message => {
                console.warn('Got', message);
            });
            source.addEventListener('layers', message => {
                console.warn('Got', message);
            });
        });
    }

The startSSE function has indeed the task of setting up the events subscription. As we’ve discussed before, the first step is always issuing an HTTP POST to the address the WHEP response gave us back via a Link header: this is what the function does with an XHR request, which includes a JSON encoding of the events we’re interested in. In case that request succeeds, we then read the Location header, and use the returned value as the address we’ll pass to a new EventSource instance: at this point, we add separate listeners for all events that we’re aware of. At the time of writing, we do nothing more with these events than printing them on the console for debugging purposes, but there could be fancier things you could do with those instead, e.g., dynamic buttons depending on the active/inactive status of the endpoint, or a specific label to print the number of currently active subscribers.

Notice that, at the time of writing, only the viewercount, active and inactive events have been implemented. More precisely, viewercount has been implemented simply by intercepting the requests in the WHEP server itself: this means that it can accurately track subscribers in the current instance, but doesn’t really do anything with requests to the same WebRTC resource that may be handled via other servers instead, e.g., other WHEP server instances. Again, since this is just a prototype, that’s fine for now. For the active and inactive events, instead, we had to add some sort of probing to the WHEP server, in order to allow it to monitor whether the associated mountpoint in the Streaming plugin on the Janus server was actually fed with data: at the moment, this is done by simply polling the plugin on a regular basis (sending an info request to know the current status), which again is fine for a simple prototype. In a more advanced setup, different mechanisms may need to be used instead (e.g., something based on event handlers, or a different push-based approach). Notice that, while the draft says a JSON payload should always be provided as part of the events data, no info is provided on what should be sent in case of active and inactive: as such, the prototype simply sends an empty object for now (which is what you can see in the SSE snippet we introduced before, for instance).
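Whatever the probing mechanism is, the polling results need to be turned into events only when something actually changes, or subscribers would be flooded with duplicates. The snippet below is a hypothetical sketch of that step, not the prototype’s actual code: `createActivityTracker` and its `emit` callback are made-up names, and how you figure out from the info response whether the mountpoint is being fed is left out on purpose.

```javascript
// Turn periodic poll results into 'active' / 'inactive' events, emitting only
// when the state actually changes. `emit(name, payload)` is whatever delivers
// the event to subscribers (e.g., writing it to the open SSE responses).
function createActivityTracker(emit) {
  let active = null; // unknown until the first poll result comes in
  return function update(isFed) {
    const next = Boolean(isFed);
    if (next === active) return;
    active = next;
    // No payload is specified for these events, so an empty object is sent
    emit(next ? 'active' : 'inactive', {});
  };
}
```

The returned `update` function would then be called from a timer, every time a poll of the mountpoint completes. Notice that in this sketch the very first poll always results in an event, since the initial state is unknown: whether that’s desirable is a design choice.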

The prototype doesn’t implement the layers event yet, though, nor does it implement the active layer selection that the draft introduces alongside it. The main reason for this is that it’s currently unclear what info those events should provide: while there are a few examples, many aspects are still unclear, and it’s also not clear whether those events should only provide info on what’s available, or if there are also ways to explicitly tell subscribers which layer they’re currently receiving. As a consequence, since active layer selection depends on layers events to do its job, for the time being I decided to skip both, and get back to them at a later stage, maybe when they’re better addressed in the document.

What’s next?

We’ve covered a lot of ground in this blog post: I aimed for something shorter and, as usual, I ended up writing yet another “Divine Comedy” chapter instead! We’ve seen how much WHEP changed from when it was first introduced to its second iteration, how one of the modes disappeared, how this impacted Janus (and killed any hope of achieving a standards-based WebRTC federation in the short term) and how we had to update both the Janus Streaming plugin and our Simple WHEP Server prototype to accommodate the latest specification. Finally, we introduced the new SSE-based event subscriptions that WHEP now provides, and our efforts to prototype that new functionality as well.

At this stage, our prototype implementation is very close to what the latest version of the specification describes. Now is the time to give it a try, and possibly test it against other implementations, to check whether or not WHEP has shortcomings to address. Hopefully, the next version of the draft will make some obscure parts of the text clearer, allowing us to implement what’s missing too. Of course, we’ll also look forward to other potential enhancements to the specification, something that we should probably expect considering many are looking to WHEP as a replacement for a well established technology that has many more features than WHEP currently has.

That’s all, folks!

I hope you enjoyed this short ride through the wonders of WebRTC broadcasting. If you’re curious about the things I’ve talked about here, please do experiment with the different prototypes we created for the purpose: the more feedback we get, the better the prototypes will be!