If you’re confused about all these acronyms, you’re not alone! Those interested in WebRTC and broadcasting have probably heard about WHIP already: WHEP and WHAP maybe not as much.

And if you’re even more confused about why I chose a picture of Huey, Dewey and Louie as the featured image, that’s because in Italian they’re called “Qui, Quo e Qua”, which is the first thing that came to mind when I came up with the title. I know, it’s incredibly stupid, but please bear with me: I’ve just come back from my summer break!

We’ve talked about WHIP, a new standard protocol for media ingestion, quite a lot here: just a few months ago, we wrote a lengthy blog post on the open source efforts we devoted to it, and at both CommCon and FOSDEM we explained in detail how it could help implement broadcasting scenarios via WebRTC. That said, WHIP as anticipated only covers media ingestion: as such, it doesn’t provide any mechanism for actually distributing the ingested stream once it’s available, whether it’s via WebRTC or not.

This is where the other two acronyms find their place. WHAP is one of the proposals that were suggested as a way to distribute a WHIP ingested stream, but unfortunately not much information or documentation has ever been provided for those interested in giving it a try. WHEP, instead, is a standard proposal made by the same people that brought you WHIP, and has recently been submitted as an internet draft in the WISH working group: as such, this is indeed the protocol we’ll discuss today, for which I prototyped a simple server and client just as I did for WHIP at the time.

What’s WHEP?

WHEP stands for “WebRTC-HTTP egress protocol”, and was conceived as a companion protocol to WHIP. Just as WHIP takes care of the ingestion process in a broadcasting infrastructure, WHEP takes care of distributing streams via WebRTC instead. From a protocol perspective, in the current proposal the two protocols are very similar, and in fact Sergio joked that he just replaced all occurrences of “whip” with “whep” to get the ball rolling 😀 But in practice, there are a few key differences, due to the fundamentally different purpose of the protocols.

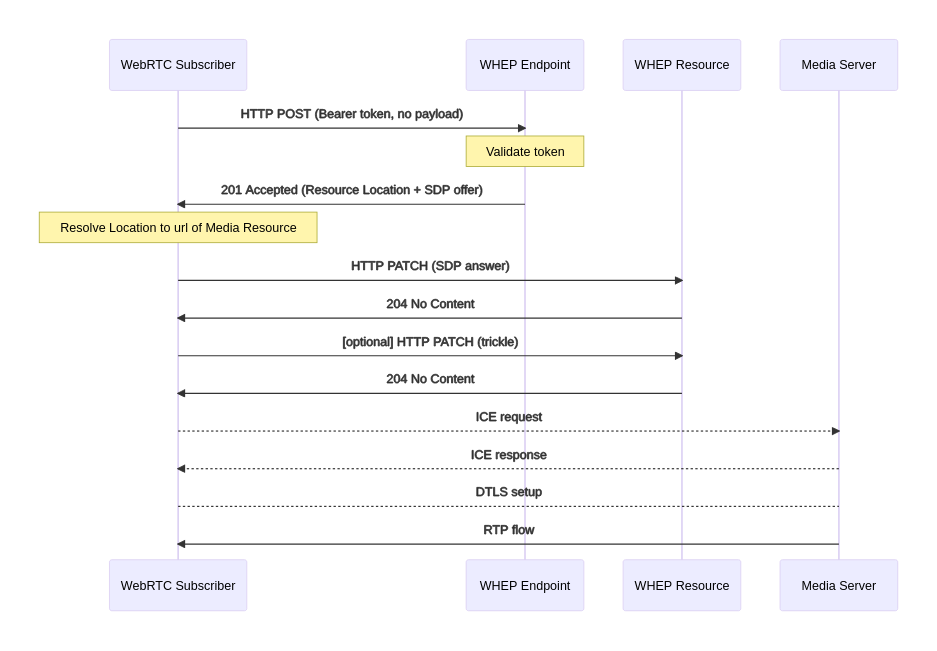

More precisely, while WHIP works under the assumption that the client will always provide an SDP offer, and the server an answer to that, in a single HTTP exchange, in WHEP there are two different modes of operation:

- in the first mode, the WHEP client (subscriber) will also send an offer to the WHEP server via POST, and the server will provide an answer back, all in a single HTTP exchange;

- in the second mode, the WHEP client will first send an empty POST message to signal their intention to subscribe to a stream, getting an offer back from the server, and then send a second PATCH message with their answer instead.

The two modes are presented visually in the diagrams below.

Both modes have pros and cons. The first mode has the advantage of completing the negotiation process in a single HTTP exchange, thus reducing the number of round trips needed to set up the session, but also forces the client to allocate resources for any codec they support, independently of what the WHEP endpoint actually provides (e.g., allocating both audio and video for an audio-only stream, or allocating a dozen video codecs when the WHEP endpoint only provides one); the second mode requires two HTTP exchanges to complete (one to ask for the offer, and one to provide the answer), but has the advantage of leaving the initiative on negotiation to the server, which knows how the stream is encoded and as such can constrain the process accordingly.
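To make the difference between the two modes more concrete, here is a minimal Python sketch that only describes the HTTP requests a WHEP client would send in each case, without sending anything. The `HttpStep` type, the function names and the example URLs are all hypothetical, and the `application/sdp` content type and the idea of a separate resource URL for the PATCH are assumptions borrowed from how WHIP works, not something the WHEP draft is quoted on here.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class HttpStep:
    """One HTTP request the WHEP client would send (nothing is sent here)."""
    method: str
    url: str
    headers: Dict[str, str] = field(default_factory=dict)
    body: str = ""


def client_offer_plan(endpoint_url: str, offer_sdp: str) -> List[HttpStep]:
    """First mode: a single POST carrying the client's SDP offer."""
    headers = {"Content-Type": "application/sdp"}  # assumption, as in WHIP
    return [HttpStep("POST", endpoint_url, headers, offer_sdp)]


def server_offer_plan(endpoint_url: str, resource_url: str,
                      answer_sdp: str) -> List[HttpStep]:
    """Second mode: an empty POST to get the offer, then a PATCH with the answer."""
    headers = {"Content-Type": "application/sdp"}  # assumption, as in WHIP
    return [
        HttpStep("POST", endpoint_url),  # empty body: "send me an offer"
        HttpStep("PATCH", resource_url, headers, answer_sdp),
    ]


if __name__ == "__main__":
    # Placeholder URLs and SDP, for illustration only
    plan = server_offer_plan("http://localhost:7090/whep/endpoint/test",
                             "http://localhost:7090/whep/resource/abc",
                             "v=0\r\n")
    for step in plan:
        print(step.method, step.url, len(step.body), "bytes")
```

The first plan always has one step and the second always has two, which is the trade-off discussed above: one exchange fewer versus letting the server drive the negotiation.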

The difference between the modes is not just formal, though, and how much the pros and cons matter depends on the WebRTC server backing the WHEP functionality. In Janus, for instance, both the VideoRoom and Streaming plugins assume that, for subscriptions, it’s always the server that takes the initiative, precisely because it knows which codecs are used for any of the streams: as such, as Janus works today, the first mode is actually not possible to implement, and the same can probably be said for other implementations as well (no matter which mode they’d favour).

This means that, while the current version of the WHEP document is quite bare-bones, further revisions will definitely need to better address how servers and clients should react when either only supports a single mode of operation rather than both (e.g., in terms of error codes or expected behaviour), in order to allow for a seamless fallback to the right one in case the one they chose initially doesn’t work.

I won’t share other diagrams on how WHEP is supposed to work because, apart from the two modes introduced above, everything else currently works pretty much exactly like WHIP does: this means that you use Bearer tokens the same way, you trickle using PATCH on the resource URL, you tear down a subscription using DELETE and so on. For more information on how those work, you can refer to the WHIP blog post.
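As a quick reminder of what those shared mechanics look like, the following Python sketch builds (but does not send) a trickle request and a teardown request for an existing subscription. The function names and the tuple layout are hypothetical; the `application/trickle-ice-sdpfrag` content type is the one WHIP uses for trickling, and assuming WHEP reuses it unchanged is exactly that, an assumption.

```python
from typing import Dict, Optional, Tuple

# (method, url, headers, body): a plain description of an HTTP request
Request = Tuple[str, str, Dict[str, str], str]


def auth_headers(token: Optional[str]) -> Dict[str, str]:
    """Bearer token header, or no header at all for open endpoints."""
    return {"Authorization": f"Bearer {token}"} if token else {}


def trickle_request(resource_url: str, sdp_fragment: str,
                    token: Optional[str] = None) -> Request:
    """PATCH on the resource URL carrying one or more ICE candidates."""
    headers = {"Content-Type": "application/trickle-ice-sdpfrag"}
    headers.update(auth_headers(token))
    return ("PATCH", resource_url, headers, sdp_fragment)


def teardown_request(resource_url: str,
                     token: Optional[str] = None) -> Request:
    """DELETE on the resource URL to end the subscription."""
    return ("DELETE", resource_url, auth_headers(token), "")


if __name__ == "__main__":
    # Placeholder resource URL and candidate, for illustration only
    url = "http://localhost:7090/whep/resource/abc"
    print(trickle_request(url, "a=candidate:placeholder", "pleaseletmein"))
    print(teardown_request(url, "pleaseletmein"))
```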

An open source WHEP server (based on Janus)

When I talked about WHIP last time, I also shared an open source Janus-based WHIP server implementation called Simple WHIP Server. Since I wanted to start playing with WHEP as well, I ended up doing exactly the same with WHEP, with again a Janus-based server and a client to test it (actually a couple: keep reading!).

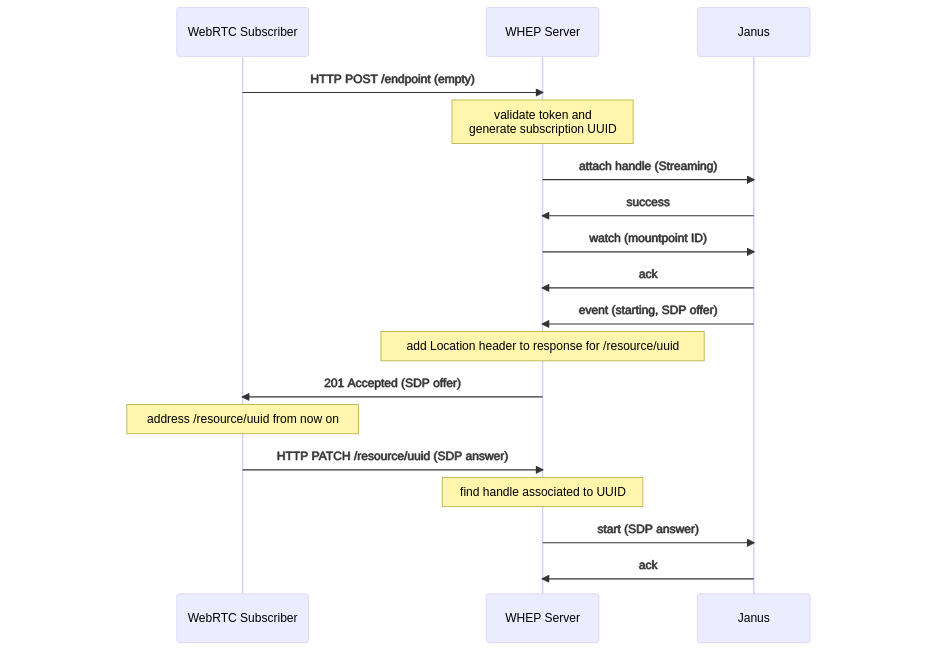

For the server, just as for WHIP I wrote a simple Express-based node.js REST server implementing the WHEP API, and had it transparently translate the WHEP interactions to and from the Janus API internally. A sequence diagram showing how WHEP was mapped to Janus is presented below.

As you can see, the diagram above implements what we called the “second mode” of operation in WHEP, that is the one where it’s the server that provides the offer, and not the client. That’s because, as anticipated before, no plugin in Janus currently allows you to provide an offer yourself to subscribe to a stream: an offer is always generated by the plugin, and so the server, itself.

More specifically, I chose the Streaming plugin as the source of broadcasts for the WHEP server, as its main purpose is indeed implementing streaming and broadcasting functionality in Janus. Besides, since it’s based on the concept of static “mountpoints” that can be fed with data to stream from different sources at different times, it seemed like a good match for WHEP endpoints, whose life cycle could also be independent of the presence of actual media producers.
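For readers who don't have the Janus API fresh in mind, here is a rough Python sketch of the Janus messages that a server-offer WHEP subscription could translate to when backed by the Streaming plugin. It relies on the plugin's `watch` and `start` requests; the helper names are hypothetical, session and handle identifiers are left out for brevity, and this is only a plausible mapping, not a transcription of what the Simple WHEP Server actually does internally.

```python
import uuid
from typing import Any, Dict, List


def janus_message(janus: str, **fields: Any) -> Dict[str, Any]:
    """Build a Janus API message with a fresh transaction identifier."""
    return {"janus": janus, "transaction": uuid.uuid4().hex, **fields}


def subscribe_messages(mountpoint: int) -> List[Dict[str, Any]]:
    """Messages that could back the empty WHEP POST (server-offer mode)."""
    return [
        janus_message("create"),
        janus_message("attach", plugin="janus.plugin.streaming"),
        # The plugin replies asynchronously with an event carrying a JSEP
        # offer, which would become the body of the WHEP response
        janus_message("message", body={"request": "watch", "id": mountpoint}),
    ]


def answer_message(answer_sdp: str) -> Dict[str, Any]:
    """Message that could back the WHEP PATCH carrying the client's answer."""
    return janus_message("message", body={"request": "start"},
                         jsep={"type": "answer", "sdp": answer_sdp})


def teardown_message() -> Dict[str, Any]:
    """Message that could back the WHEP DELETE."""
    return janus_message("detach")


if __name__ == "__main__":
    for msg in subscribe_messages(1):
        print(msg["janus"], msg.get("body", {}))
```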

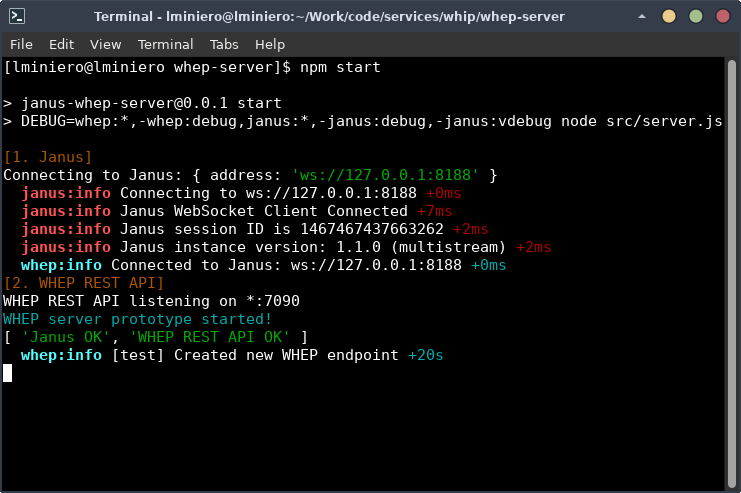

Without bothering you with more sequence diagrams (which, again, are pretty much the same as the WHIP ones, e.g., with respect to trickling or tearing down sessions), I made the current prototype implementation available as open source, and since I like to confuse people, I decided to call it Simple WHEP Server (which I’m sure you’ll agree is very different from the existing Simple WHIP Server!).

Using it is quite straightforward: just as in the WHIP Server, there’s a basic web-based UI from which you can create endpoints. You specify the Janus Streaming mountpoint it should be mapped to, whether a token is required, and so on, and that’s it.

Unlike the WHIP Server, though, the WHEP Server does come with an integrated (and very simplified) web-based WHEP client too (told you there were two this time!). If you check the screenshot above, you’ll see there’s a bright green button called “Watch”: when you click that button, you’re redirected to a different web page that acts as a WHEP client for the specified WHEP endpoint. As such, it uses the WHEP API to talk to the WHEP Server to try and create a WebRTC subscription. The screenshot below shows an example that uses an endpoint mapped to a Streaming mountpoint feeding the same video we have in our online demos.

This web-based client is quite bare-bones at the moment, but as a simple way to test whether or not my code was working it did its job. The next step was, again, a native client.

An open source WHEP client (based on GStreamer)

For WHIP, we implemented a prototype native client based on GStreamer, called Simple WHIP Client. The main reason for working on a native solution was the expectations people usually have when it comes to media ingestion, e.g., where they might be used to tools like OBS and similar to produce and stream their content, rather than use their browser. This meant that prototyping how a simplified native client could indeed use WebRTC for the purpose would help foster WHIP adoption (and it looks like some activity has been started to add WebRTC support to OBS just recently!).

For subscribing to a stream, a native client may seem less important, since after all we’re all used to opening the browser when just watching a live stream, e.g., a webinar broadcast. That said, native clients are actually also relevant when it comes to solutions like WHEP, since many broadcasting technologies today have native endpoints (e.g., in Smart TVs or stand-alone applications). As such, I decided to adapt the existing WHIP client to turn the tables and make it subscribe to a stream, rather than encode one. It took a bit of work but not much, so I shared that modified client as an open source prototype as well: to add even more confusion, the prototype is called Simple WHEP Client (I can understand if you hate me now; I do too).

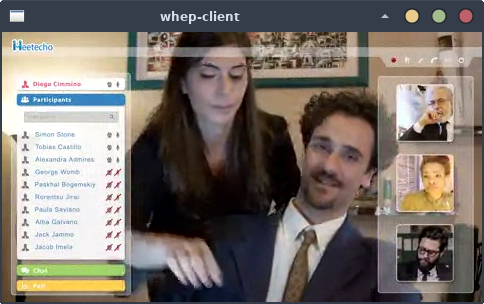

Just like the WHIP client, the WHEP client is a command line tool that uses GStreamer’s webrtcbin under the hood. It shares pretty much exactly the same command line settings, except for the need to provide an audio and/or video pipeline: since the WHEP client only subscribes to an existing stream, rather than producing one, I found there was no need to add constraints, and so I left the negotiation up to the WebRTC stack instead. This means that, when an offer is received, the WHEP client tries to handle any incoming stream that is offered, and creates a decoder (plus elements to render/play the stream) on the fly. The end result is what you can see in the screenshots below, where opening the same WHEP endpoint we used in the web-based WHEP client before ends up creating a basic window with the audio/video content. Notice that the window is really basic, as it’s very much just displaying and playing the audio/video content: there are no controls, no information, no metadata and no UI.
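To give an idea of what "handling any incoming stream that is offered" depends on, the Python sketch below lists the media sections and codec names found in an SDP offer. It is only an illustration of the information the offer carries: the function name is hypothetical, the parsing is deliberately naive, and it says nothing about how webrtcbin actually processes the offer internally.

```python
from typing import Any, Dict, List


def offered_media(sdp: str) -> List[Dict[str, Any]]:
    """List each m-line in an SDP with the codec names from its a=rtpmap lines."""
    sections: List[Dict[str, Any]] = []
    for raw in sdp.splitlines():
        line = raw.strip()
        if line.startswith("m="):
            # m=<media> <port> <proto> <fmt> ...
            sections.append({"kind": line[2:].split()[0], "codecs": []})
        elif line.startswith("a=rtpmap:") and sections:
            # a=rtpmap:<payload type> <encoding name>/<clock rate>[/<params>]
            encoding = line.split(None, 1)[1].split("/")[0]
            sections[-1]["codecs"].append(encoding)
    return sections


if __name__ == "__main__":
    # Heavily trimmed, made-up offer with one audio and one video section
    offer = (
        "v=0\r\n"
        "m=audio 9 UDP/TLS/RTP/SAVPF 100\r\n"
        "a=rtpmap:100 opus/48000/2\r\n"
        "m=video 9 UDP/TLS/RTP/SAVPF 96\r\n"
        "a=rtpmap:96 VP8/90000\r\n"
    )
    for section in offered_media(offer):
        print(section["kind"], section["codecs"])
```

With a server-provided offer, a list like this contains only what the endpoint really streams, which is why no user-provided pipelines are needed in this mode.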

It’s important to point out that, since I was testing this with the WHEP server introduced before, which is powered by Janus, the WHEP client also only implements the “server offers” WHEP mode: as such, at the time of writing it can’t work as an offering client instead, and so won’t work with WHEP servers that expect that kind of behaviour. While I might change this in the future, I don’t plan to do it in the short term, especially considering it would require some refactoring in the code: in fact, while with the “server offers” mode I could avoid the user-provided pipelines, in a “client offers” mode I’d have to add them instead, and make those flexible enough to allow for multiple codecs at the same time. I’m not proficient enough with GStreamer to know how this can be done, so I guess this can wait for now (especially considering this is a basic prototype of a very early version of a new protocol anyway!).

Testing WHIP and WHEP together

At this point you may be wondering: but do WHIP and WHEP actually work together? If WHIP allows for WebRTC ingestion, and WHEP for WebRTC broadcasting, can the former feed the latter somehow? That is indeed the idea from a standardization perspective, even though the relationship between the two protocols is left vague and loose on purpose, as how one becomes the source of the other (possibly with multiple WHEP endpoints for scalability) is left up to the implementations.

This is true for my WHIP/WHEP prototypes as well. While you can easily create a WHIP endpoint in a WHIP server, and a WHEP endpoint on the WHEP server, left on their own they’ll be completely separate endpoints that have absolutely no relationship with each other. This is especially relevant when we look at the plugins used in the two implementations, since:

- the WHIP server uses the VideoRoom plugin for ingestion, mapping a WHIP client to a VideoRoom publisher;

- the WHEP server uses the Streaming plugin for distribution instead, mapping a WHEP client to a Streaming mountpoint subscriber.

This means that, since two different plugins are used for the respective functionality, even if they sit in the same Janus instance they wouldn’t be able to work together unless properly orchestrated.

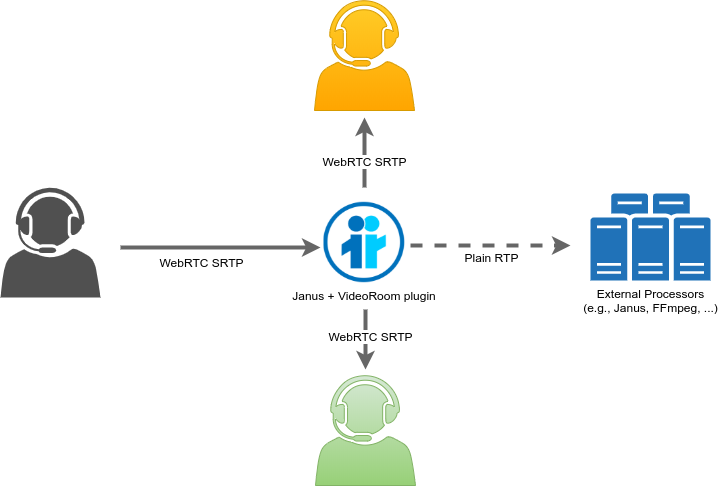

This is where RTP forwarders can help. In the Janus “lingo”, an RTP forwarder is an entity internal to plugins that can relay incoming WebRTC streams (e.g., coming from a WebRTC publisher) to an external UDP address via plain RTP (or via SRTP, if needed). Considering this external recipient could actually be a previously configured Streaming plugin mountpoint, this means that RTP forwarders provide a very easy and efficient way to redistribute a single WebRTC stream to multiple Janus instances for rebroadcasting via the Streaming plugin.

The WHIP server does support RTP forwarders when you create an endpoint, which means that tying a WHEP endpoint to a WHIP one in the prototypes is relatively easy:

- you create a Streaming mountpoint (e.g., on Janus B);

- you create a VideoRoom room (e.g., on Janus A);

- you create a WHIP endpoint, map it to the VideoRoom on Janus A, and configure it so that any published stream is automatically forwarded to the Streaming mountpoint on Janus B;

- you create a WHEP endpoint and map it to the Streaming mountpoint on Janus B.

At this point, you have both a WHIP and a WHEP endpoint, and any time a stream is published on the WHIP endpoint it can be broadcast by the WHEP endpoint. In this simple example we’re just feeding a single WHEP endpoint (because that’s how the WHIP server is currently implemented, with support for a single RTP forwarder), but nothing prevents you from feeding more than one instead, or possibly relying on some smarter internal distribution (e.g., a tree-based topology à la SOLEIL) to feed a lot of them at the same time to scale the broadcast to a much wider audience. If you want to use Janus for the purpose, you can find some generic guidance on this process in the presentations I made at both CommCon and FOSDEM last year.

That said, let’s check how we can make this work in practice. For the sake of simplicity, let’s assume that Janus A and Janus B are actually the same instance; besides, let’s use the VideoRoom room (id=1234) and Streaming mountpoint (id=1) that are created by default when you first install Janus, to make it easier to test. At this point, this means that we only need to create the endpoints, configure them to work together, and see if it works.

Since we need to configure RTP forwarding, we can’t use the simplified web UI to create the WHIP endpoint, but need to send a request manually. This isn’t hard, though, as it can be done with a simple curl one-liner (using a message that’s specific to this WHIP server implementation, and not part of the WHIP specification):

curl -H 'Content-Type: application/json' -d '{"id": "test", "room": 1234, "token": "verysecret", "secret": "adminpwd", "recipient": {"host": "127.0.0.1", "audioPort": 5002, "videoPort": 5004, "videoRtcpPort": 5005}}' http://localhost:7080/whip/create

In a nutshell, we’re creating an endpoint called test (which will require the Bearer token verysecret), mapping it to room 1234, and configuring it to forward the media to 127.0.0.1 (the address of the Janus recipient, localhost in my dumb test), and more precisely to port 5002 for audio and 5004 for video (plus 5005 for RTCP latching).

Now that we’ve created a WHIP endpoint and configured it to feed a specific Streaming mountpoint, we can create a new WHEP endpoint on the WHEP server and map it to that same mountpoint. This is something we can do via the web-UI since there’s no particular tweak to make, but just to show how things work under the hood, let’s show a curl one-liner for this too:

curl -H 'Content-Type: application/json' -d '{"id": "test", "mountpoint": 1, "token": "pleaseletmein"}' http://localhost:7090/whep/create

We’ve now created a WHEP endpoint test (which will require the Bearer token pleaseletmein), mapping it to Streaming mountpoint 1 (the same the WHIP endpoint is going to feed). Notice that, even though we gave the WHIP and WHEP endpoints the same name (test), they’re completely separate and independent resources, each living in its own server: I chose the same name for both just to give the impression that they’re actually the same “stream”, from ingestion to distribution, and so we can address them the same way, but again they’re separate things that are accessed via different protocols.
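Since the only thing tying the two endpoints together is the mountpoint, a small script can derive both `create` payloads from a single description, so that the forwarding ports and the mountpoint ID can't drift apart. The Python sketch below does just that: the payload fields are the ones used in the two curl requests above, while the `MOUNTPOINT` dictionary and the function names are hypothetical helpers introduced for illustration.

```python
import json
from typing import Any, Dict

# Where the default mountpoint (id=1) receives RTP, matching the values
# used in the WHIP "create" request; adapt this to your own mountpoint
MOUNTPOINT: Dict[str, Any] = {
    "id": 1,
    "host": "127.0.0.1",
    "audioPort": 5002,
    "videoPort": 5004,
    "videoRtcpPort": 5005,
}


def whip_create_payload(name: str, room: int, token: str, secret: str,
                        mountpoint: Dict[str, Any]) -> Dict[str, Any]:
    """WHIP endpoint that forwards published media to the mountpoint."""
    keys = ("host", "audioPort", "videoPort", "videoRtcpPort")
    recipient = {key: mountpoint[key] for key in keys}
    return {"id": name, "room": room, "token": token,
            "secret": secret, "recipient": recipient}


def whep_create_payload(name: str, token: str,
                        mountpoint: Dict[str, Any]) -> Dict[str, Any]:
    """WHEP endpoint that serves the very same mountpoint."""
    return {"id": name, "mountpoint": mountpoint["id"], "token": token}


if __name__ == "__main__":
    # Print the JSON bodies; they can be passed to curl via -d
    print(json.dumps(whip_create_payload("test", 1234, "verysecret",
                                         "adminpwd", MOUNTPOINT)))
    print(json.dumps(whep_create_payload("test", "pleaseletmein", MOUNTPOINT)))
```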

At this point, we can start ingesting media to the WHIP endpoint from a WHIP client. If everything goes as it should, this ingested media should be automatically forwarded to the mountpoint, so that it can be rebroadcast via the WHEP endpoint. For media ingestion, we can use the Simple WHIP Client, using a command like the following:

./whip-client -u http://localhost:7080/whip/endpoint/test -t verysecret -A "audiotestsrc is-live=true wave=red-noise ! audioconvert ! audioresample ! queue ! opusenc ! rtpopuspay pt=100 ssrc=1 ! queue ! application/x-rtp,media=audio,encoding-name=OPUS,payload=100" -V "videotestsrc is-live=true pattern=ball ! videoconvert ! queue ! vp8enc deadline=1 ! rtpvp8pay pt=96 ssrc=2 ! queue ! application/x-rtp,media=video,encoding-name=VP8,payload=96"

This is one of the examples provided in the client’s README, and very simply creates a pipeline that generates a test audio and video stream, and sends it via WebRTC. The visual output will be a bouncing ball, while the audio will be some red noise. Configuring it to capture webcam/mic or something else would be quite easy, but since this is just a test, test streams are perfectly fine. As soon as the WHIP client has created a stream, we’ll see something like this on Janus:

[rtp-sample] New video stream! (#1, ssrc=1168900077, index 0)

[rtp-sample] New audio stream! (#0, ssrc=1944780569)

This is telling us that the default mountpoint (which is indeed called rtp-sample) has detected incoming RTP traffic for both audio and video, which means that the WHIP server managed to automatically redirect the WHIP client’s WebRTC packets to the Streaming mountpoint via plain RTP.

Now the last thing we need to do is check whether or not the WHEP endpoint can actually distribute it to interested WHEP clients. Since we have two clients to test it with (a web-based and a native one), we can open the endpoint URL in both of them:

The bouncing ball and the skull-drilling red noise are there in both clients, which means that the simple test we made did indeed work as expected. Hurrah!

What’s next?

The WHEP proposal is very young, as a first individual draft was only submitted a couple of months ago, and it hasn’t been adopted as a Working Group item yet either. As such, many things could change in the future, both in how the WHEP protocol itself works and with respect to other proposals that may see the light. That said, there are many reasons to be excited about the current state of WebRTC broadcasting, and so I’m personally looking forward to seeing what will happen next, and as usual I’ll try to contribute as much as possible. If you’re also interested in keeping track of how it progresses, make sure you follow the WISH mailing list.

That’s all, folks!

I hope you enjoyed this! It’s a shorter and less detailed read than my usual streams-of-consciousness lengthy posts, but hopefully it managed to interest you enough in what’s indeed an exciting development when it comes to WebRTC-based broadcasting.