One question we often get is: how do I use the SIP and VideoRoom or AudioBridge plugin together? The short answer is that you can’t, at least not directly, because of how Janus is designed. While Janus is indeed modular, and made of different plugins that all refer to the core, its plugins don’t really talk to each other. This means that, while the SIP plugin does allow Janus to act as a SIP gateway, its purpose is to let WebRTC users engage with a SIP infrastructure, not to add SIP functionality to other Janus plugins.

That said, there are indeed some more “creative” ways to make things happen. In this post, I’ll describe a quick and simple way to allow the AudioBridge plugin to work as an audio conference server that SIP users can join too.

AudioBridge? What’s that?

The AudioBridge is a Janus plugin that implements an audio MCU (Multipoint Control Unit) functionality. Since it is an MCU rather than an SFU, this means that all audio streams are mixed rather than relayed as the VideoRoom does. As a consequence, this plugin actually decodes the incoming audio streams, in order to be able to mix them, and then encodes them again so that they can be consumed by WebRTC endpoints. At the time of writing, both Opus and G.711 are supported as codecs.

As a Janus media plugin, it basically acts as an audio mixer and transcoder, implementing the concept of audio rooms that multiple WebRTC participants can join to interact with each other. Since audio is mixed, this means that a single PeerConnection can be used by each participant to both send their contribution and receive the audio from the other participants, no matter how many there are: as such, it can be a valuable component whenever, for instance, available bandwidth may be a challenge. Of course, this does require some additional CPU usage, as mixing and transcoding are more CPU intensive than just relaying packets. That said, the plugin is limited to audio, whose mixing is not very intensive; video is automatically rejected instead. In case video is needed, plugins like the VideoRoom can be used in parallel (which is what we do in our own Virtual Event Platform, for instance), using an SFU approach.

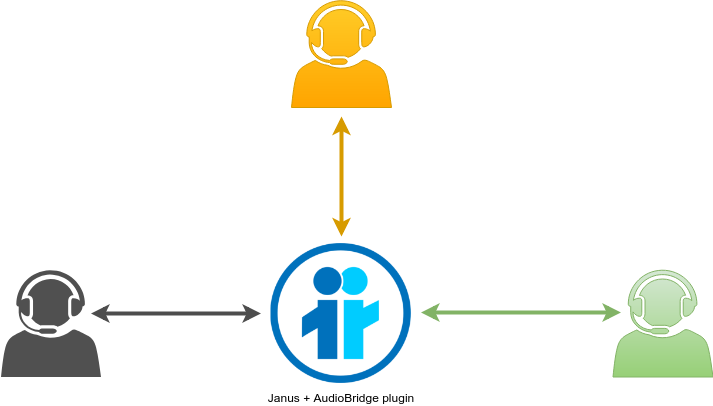

A quick sketch of how the AudioBridge plugin interacts with multiple users can be seen in the image below.

Even though there are three participants in the room, each of them only has a single PeerConnection, which presents each of them with a mix of the other two participants. As an audio MCU, you can see why it’s often considered a potentially attractive alternative for hosting SIP-based audio conferences: while WebRTC has taken the RTC world by storm, SIP-based communication platforms are still very much widespread, and so are SIP endpoints.

That said, out of the box the AudioBridge plugin can only be accessed via the Janus API, and only using WebRTC as a transport. As such, direct access via SIP is definitely not possible, since the plugin was not conceived that way. There are a couple of ways to make an “indirect” SIP access possible, though:

- for WebRTC compliant SIP endpoints, simply add a SIP/Janus API translation layer, so that the SIP endpoint and Janus can establish a WebRTC PeerConnection;

- use the “plain RTP participants” functionality in the AudioBridge plugin, and add a SIP/Janus API translation layer for signalling.

In this post I’ll focus on the second option, since expecting SIP endpoints to be WebRTC compliant may be too strict a requirement, while support for basic RTP is something they all have.

Plain RTP AudioBridge Participants

As anticipated, originally the AudioBridge exclusively supported WebRTC participation out of the box: this meant that a participant had to have a WebRTC stack in order to join a room and interact with other users. This requirement was loosened in later versions of the plugin, and most importantly in PR 2464, which is where we added support for the so-called “plain RTP participants”.

The concept is fairly simple: add integrated support for sending and receiving plain RTP as a way to exchange the media directly in the plugin, rather than relying on the WebRTC-to-RTP functionality the Janus core provides automatically to all plugins. Since at a transcoding/mixing level the plugin already worked on plain RTP (which is what the Janus core provides plugins with, after stripping the WebRTC layers), we only needed to facilitate the exchange of RTP packets at the network level, and tie that to the structures we already had to handle participants in the plugin. Taking into account the picture we showed before, this allowed the AudioBridge plugin to act as shown in the picture below.

How participants interact and see each other doesn’t really change: what changes is that, while some users will keep on using WebRTC as a way to connect to the AudioBridge, others may use plain RTP instead, of course ensuring audio streams are encoded using codecs the AudioBridge plugin can handle.

Since with plain RTP participants the AudioBridge is directly responsible for the sockets handling the incoming/outgoing streams (whereas with WebRTC streams packets are exchanged via native Janus core callbacks), the plugin also needs to somehow advertise its network address, and be aware of the remote participant address as well. This is currently done in a fairly basic way, by leveraging a very simplified offer/answer model on top of the AudioBridge API. Specifically, the plugin currently only supports the remote participant advertising their willingness to use plain RTP and sending an “offer” first, to which the plugin provides an “answer” in response. This exchange is done as part of the existing join request, that is the request all AudioBridge users must send in order to join a specific room, and an example is provided in the snippet below:

    {
        request: "join",
        room: 1234,
        display: "Plain RTP participant",
        rtp: {
            ip: "192.168.1.10",
            port: 2468,
            audiolevel_ext: 1,
            payload_type: 111,
            fec: true
        }
    }

The rtp object above tells the AudioBridge plugin that the participant will not be using WebRTC (a jsep object would be attached to the request otherwise), but is instead interested in leveraging plain RTP support. In order to do so, the participant also provides some information on themselves, namely the IP address and port they’ll be sending RTP from (and expecting RTP to be sent to), plus some additional info that may be of use. As you can see, it’s basically an incredibly bare-bones representation of what you could find in an SDP object, but formatted as a plain JSON object instead: since only audio is required, and very few features are usually supported, it made sense to simplify the syntax as much as possible. Notice that no codec information is provided here: this is because the codec to use to join a room is something the plugin API already exposes as a different property in the join request.
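As a rough illustration of the request shape described above, the helper below assembles a plain RTP join payload. It's only a sketch: `buildPlainRtpJoin` and its option names are hypothetical, and treating `payload_type`, `audiolevel_ext` and `fec` as optional is an assumption on my part, so check the plugin documentation for which properties are actually required.

```javascript
// Hypothetical helper: builds the body of a plain RTP "join" request.
// Only the property names in the returned object come from the example
// above; the function itself and its option names are made up.
function buildPlainRtpJoin({ room, display, ip, port, payloadType, audioLevelExt, fec }) {
  if (typeof ip !== 'string' || ip.length === 0)
    throw new TypeError('ip must be a non-empty string');
  if (!Number.isInteger(port) || port < 1 || port > 65535)
    throw new RangeError('port must be an integer between 1 and 65535');

  // Address and port the participant sends RTP from (and expects RTP on)
  const rtp = { ip, port };
  // Assumption: these properties are optional, so only add them when set
  if (payloadType !== undefined) rtp.payload_type = payloadType;
  if (audioLevelExt !== undefined) rtp.audiolevel_ext = audioLevelExt;
  if (fec !== undefined) rtp.fec = fec;

  // No jsep object is attached: the rtp object signals plain RTP usage
  return { request: 'join', room, display, rtp };
}

const exampleJoin = buildPlainRtpJoin({
  room: 1234,
  display: 'Plain RTP participant',
  ip: '192.168.1.10',
  port: 2468,
  payloadType: 111,
  audioLevelExt: 1,
  fec: true
});
console.log(JSON.stringify(exampleJoin, null, 2));
```

Validating the port up front is a design choice of this sketch: it makes a malformed offer fail before anything is sent to Janus.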

If the plugin accepts the RTP participant (a room can be configured not to, and the feature is indeed disabled by default), the plugin will answer with its own rtp object, which will include its own properties, e.g.:

    {
        "audiobridge" : "joined",
        "room" : 1234,
        "id" : 9375621113,
        "display" : "Plain RTP participant",
        "participants" : [
            // Array of existing participants in the room
        ],
        "rtp": {
            "ip": "192.168.1.232",
            "port": 10000,
            "payload_type": 111
        }
    }

This basically concludes this simplified offer/answer exchange, since as a matter of fact both the participant and the plugin have all the information they need to start exchanging RTP packets. As far as other AudioBridge participants are concerned, nothing changes: they’re notified about this participant just as they’re notified about any other, and they’re actually unaware of the fact this participant is using plain RTP rather than WebRTC to exchange media, since media packets are exactly the same nevertheless.
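To make the outcome of this exchange concrete, here is a small sketch that pairs the rtp object sent by the participant with the one returned by the plugin, and summarizes the resulting media path. The `mediaPath` function is purely illustrative (it's not part of Janus or Janode), and the validation it performs is my own assumption about what a sane rtp object looks like.

```javascript
// Hypothetical helper: given the "offer" rtp object (participant) and the
// "answer" rtp object (plugin), describe where RTP flows in each direction.
function mediaPath(offerRtp, answerRtp) {
  for (const [name, rtp] of [['offer', offerRtp], ['answer', answerRtp]]) {
    const validPort = rtp && Number.isInteger(rtp.port) && rtp.port >= 1 && rtp.port <= 65535;
    if (!rtp || typeof rtp.ip !== 'string' || !validPort)
      throw new Error(`Invalid rtp object in ${name}`);
  }
  return {
    // The plugin sends the room mix here, and expects the participant's audio from here
    participant: `${offerRtp.ip}:${offerRtp.port}`,
    // The participant sends its audio here, and receives the mix from here
    plugin: `${answerRtp.ip}:${answerRtp.port}`,
    payloadType: answerRtp.payload_type
  };
}

// Values taken from the two snippets above
console.log(mediaPath(
  { ip: '192.168.1.10', port: 2468, payload_type: 111 },
  { ip: '192.168.1.232', port: 10000, payload_type: 111 }
));
```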

As you can guess, having plain RTP support and a bare-bones offer/answer model in place takes us a step closer to facilitating SIP access, but we’re not done yet. In fact, while we’re using something similar to offer/answer, the objects we exchange are not SDPs. Most importantly, the AudioBridge plugin may now be exposing sockets to exchange RTP packets directly, but no change was made to do the same with SIP based signalling: as a matter of fact, the examples above demonstrate how this plain RTP functionality is integrated in the Janus/AudioBridge API itself. This means that some sort of translation layer between the AudioBridge API and SIP is still needed to complete the process, in order to bridge signalling as well as media.

Janode + Drachtio = ♥

It’s clear that to make this translation layer possible as a server side component, we need two things:

- a Janus API stack;

- a SIP stack.

We’ve already explained how the Janus SIP plugin cannot help here: in fact, the SIP plugin is merely a WebRTC-to-RTP and RTP-to-WebRTC gateway on behalf of other WebRTC users, in order to allow them to engage with SIP based infrastructures, and never actually sees the Janus API. This means that an external SIP stack is needed instead, to facilitate a translation to/from the Janus API and the AudioBridge plugin.

For the Janus API, the choice was easy enough: Janode is a robust implementation of the stack written in node.js, and we use it extensively in many projects, including our own Virtual Event Platform. And since node.js would be the framework I’d use for a simple proof-of-concept (POC), the choice for the SIP stack fell on Drachtio, an open source node.js framework for SIP server applications created by Dave Horton. I’d been curious about it for a long time, and so this POC gave me an excellent opportunity to tinker with it a bit.

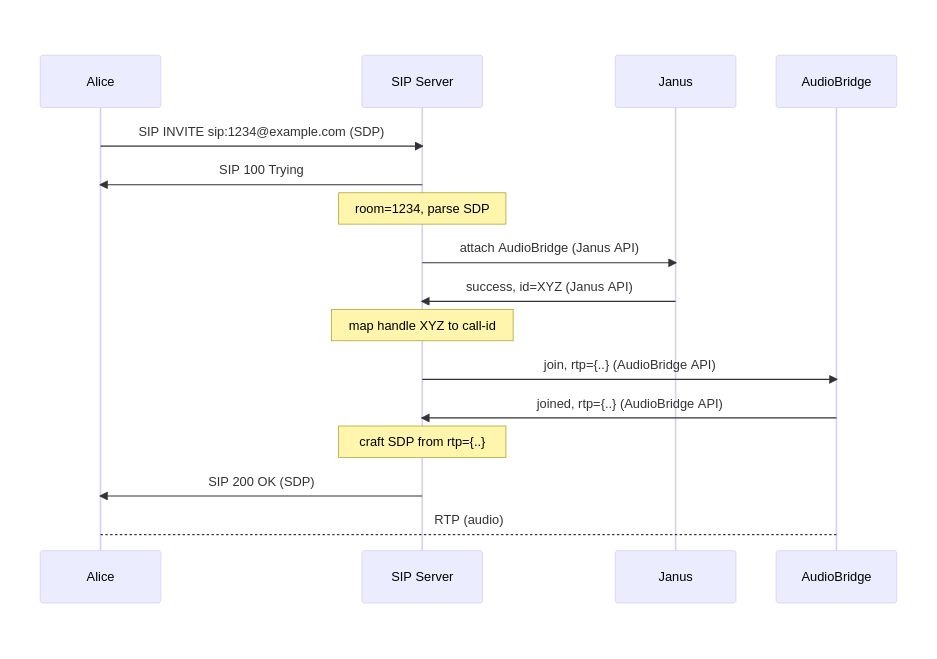

As to the POC itself, I wanted the behaviour to be simple and yet effective, to demonstrate in practice how plain RTP participants could indeed help SIP users join an AudioBridge room. As such, I conceived the POC to work this way:

- a SIP server waits for incoming INVITE messages;

- when an INVITE comes, it extracts the username part of the To header, in order to check which AudioBridge room to join, and parses the SDP to extract the relevant information;

- after that, a handle is attached to the AudioBridge plugin, and a join request is sent with an rtp object containing the info extracted from the SDP above;

- when the response arrives, the rtp object from the AudioBridge plugin is used to craft a “local” SDP, which is then sent back to the SIP caller in a 200 OK;

- media starts flowing!
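The steps above can be sketched as a single function with all the protocol-specific parts injected as dependencies. This is just a way to visualize the flow, not the actual POC code: `bridgeInvite`, the shape of the `invite` object and the three injected functions are all hypothetical, and answering with a 488 when the offer can't be used is my own assumption.

```javascript
// Hypothetical skeleton of the translation layer. The SIP and Janus parts
// are passed in as functions, so the flow itself can be read (and tested)
// without a SIP server or a Janus instance.
async function bridgeInvite(invite, { parseOffer, joinRoom, buildAnswer }) {
  // The username part of the To header identifies the room
  const room = Number.parseInt(invite.toUser, 10);
  if (Number.isNaN(room)) return { status: 404 };

  // Extract address, port and payload type from the SDP offer
  const offer = parseOffer(invite.sdp);
  if (!offer) return { status: 488 }; // assumption: reject unusable offers

  // Join the room as a plain RTP participant, get the plugin's rtp object
  const answer = await joinRoom({ room, display: invite.from, rtp: offer });

  // Craft the "local" SDP to send back in the 200 OK
  return { status: 200, sdp: buildAnswer(answer) };
}

// Demo with stubbed dependencies (no network involved)
const demoDeps = {
  parseOffer: () => ({ ip: '192.168.1.10', port: 2468, payload_type: 111 }),
  joinRoom: async () => ({ ip: '192.168.1.232', port: 10000, payload_type: 111 }),
  buildAnswer: (rtp) => `m=audio ${rtp.port} RTP/AVP ${rtp.payload_type}`
};
bridgeInvite({ toUser: '1234', from: 'sip:alice@192.168.1.232', sdp: '...' }, demoDeps)
  .then((result) => console.log(result));
```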

As you can see, nothing too fancy or complex: just a basic translation layer between two different signalling protocols. A visual representation as a sequence diagram is presented below:

Actually implementing this using Janode and Drachtio proved to be quite simple, and took very few lines of code. Please notice, though, that the code makes some assumptions and has basically no error management for the sake of simplicity: you’ll definitely want to revise the code and improve it, in case you want to try and use it for something more serious than a simple POC.

Of course, the first thing we need to do is ensuring we can intercept SIP INVITEs, which is quite easy with Drachtio; the documentation provides a simple example as a reference:

    srf.invite((req, res) => {
        res.send(486, 'So sorry, busy right now', {
            headers: {
                'X-Custom-Header': 'because why not?'
            }
        });
    });

As explained above, in our case we need to use incoming INVITEs as a trigger for connecting to the AudioBridge plugin, rather than sending a “Busy” back, but the process is basically the same. First of all, we need to process the incoming INVITE, to extract some information we’ll require, e.g.:

    srf.invite(async (req, res) => {
        // Get info from the INVITE headers
        let to = parseUri(req.getParsedHeader('to').uri);
        // The username part of the To header is the room to join
        let room = parseInt(to.user, 10);
        if(isNaN(room)) {
            res.send(404);
            return;
        }
        let from = req.getParsedHeader('from').uri;
        let callId = req.getParsedHeader('call-id');

As anticipated in the POC description, we use the To header to check which AudioBridge room the SIP endpoint wants to join. In this basic example, we expect numeric identifiers for the room, so if a number is not what we got we send a 404 SIP response back to the caller. We also extract the From and Call-ID headers: the former we’ll use as a display name (other participants in the AudioBridge room will see the SIP URI of the caller), while the latter we use as a way to tie the SIP call to the AudioBridge handle we’ll create shortly.
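One detail worth knowing about the check above is that `parseInt` is lenient: a username like `12abc` would be parsed as room 12. If you'd rather only accept purely numeric usernames, a stricter check could look like the sketch below. The `roomFromSipUri` function is a hypothetical, dependency-free stand-in that works directly on the URI string rather than on the parsed object the POC uses, and its regular expression only covers simple `sip:user@host` URIs.

```javascript
// Hypothetical stricter alternative to parseInt(to.user): returns the room
// number only if the user part of the SIP URI is made of digits only.
function roomFromSipUri(uri) {
  // Accept sip: and sips:, with or without the surrounding angle brackets
  const match = /^<?sips?:([^@]+)@/i.exec(String(uri).trim());
  if (!match) return null;
  const user = match[1];
  return /^\d+$/.test(user) ? Number(user) : null;
}

console.log(roomFromSipUri('sip:1234@192.168.1.232'));   // numeric user part
console.log(roomFromSipUri('sip:alice@192.168.1.232'));  // not a room
console.log(roomFromSipUri('sip:12abc@192.168.1.232'));  // rejected here, while parseInt('12abc') gives 12
```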

The next step, as discussed, is parsing the SDP offer: in fact, the AudioBridge plugin expects connectivity and session information to be provided as part of a basic rtp object, which means we need to first extract that info from the SDP if we want the AudioBridge to be aware of the connectivity details of the SIP caller. Since I’m lazy, I implemented a very basic SDP parser that makes a lot of assumptions: needless to say, that’s not what I’d recommend using in production! But for a dumb POC it does its job, so let’s see how it works:

    // Parse the SDP
    let ip, port, ptype;
    let lines = req.body.split('\r\n');
    for(let i=0; i<lines.length; i++) {
        if(lines[i].indexOf('m=audio ') === 0)
            port = parseInt(lines[i].split('m=audio ')[1], 10);
        else if(lines[i].indexOf('c=IN IP4 ') === 0)
            ip = lines[i].split('c=IN IP4 ')[1];
        else if(lines[i].indexOf('a=rtpmap:') === 0 && lines[i].indexOf(' opus') > 0)
            ptype = parseInt(lines[i].split('a=rtpmap:')[1], 10);
        // Stop as soon as we have everything we need
        if(ip && port && ptype)
            break;
    }

We’re basically looking for an audio m-line to get the port, a c-line to get the IP address, and the Opus rtpmap to figure out the payload type. Again, a lot of assumptions, but we’re assuming a sunny day scenario here.
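For reference, here's a slightly more defensive variant of the same idea, still far from a complete SDP parser. It's a sketch with a hypothetical `parseAudioOffer` function that relaxes a few of the assumptions above: it tolerates LF-only line endings, matches the codec name case-insensitively, only looks at the first audio section, and prefers a c-line inside that section over the session-level one.

```javascript
// Hypothetical, slightly more defensive take on the basic parser above.
// Returns { ip, port, payload_type } or null if something is missing.
function parseAudioOffer(sdp) {
  let sessionIp = null, audioIp = null, port = null, payloadType = null;
  let section = 'session';
  for (const line of sdp.split(/\r?\n/)) {
    if (line.startsWith('m=')) {
      // Only the first audio m-line is of interest, skip anything else
      const media = /^m=audio (\d+) /.exec(line);
      if (media && port === null) {
        port = Number(media[1]);
        section = 'audio';
      } else {
        section = 'other';
      }
      continue;
    }
    const conn = /^c=IN IP4 ([^\s/]+)/.exec(line);
    if (conn) {
      // A media-level c-line overrides the session-level one
      if (section === 'session') sessionIp = conn[1];
      else if (section === 'audio') audioIp = conn[1];
      continue;
    }
    if (section === 'audio' && payloadType === null) {
      const map = /^a=rtpmap:(\d+) opus\//i.exec(line);
      if (map) payloadType = Number(map[1]);
    }
  }
  const ip = audioIp ?? sessionIp;
  // Port 0 means the media section was rejected or disabled
  if (ip === null || port === null || port === 0 || payloadType === null) return null;
  return { ip, port, payload_type: payloadType };
}

const sampleOffer = [
  'v=0', 'o=alice 1 1 IN IP4 192.168.1.10', 's=-', 'c=IN IP4 192.168.1.10', 't=0 0',
  'm=audio 2468 RTP/AVP 0 111', 'a=rtpmap:0 PCMU/8000', 'a=rtpmap:111 opus/48000/2',
  'm=video 4000 RTP/AVP 96', 'c=IN IP4 10.0.0.1', ''
].join('\r\n');
console.log(parseAudioOffer(sampleOffer));
```

The returned object deliberately uses the same property names as the rtp object the AudioBridge expects, so it can be passed along as is.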

Now that we have the connectivity information, we can attach to the AudioBridge plugin, and join the specified room. Assuming a session was already created when first starting the application (and so assuming we’ll use the same session for all calls), this is trivial using Janode, and can also be done in very few lines:

    // Attach a new handle to the AudioBridge plugin for this call
    let handle = await session.attach(AudioBridgePlugin);
    // Map the handle to the Call-ID, so that we can find it again later
    calls[callId] = { handle: handle };
    let details = {
        room: room,
        display: from,
        rtp_participant: {
            ip: ip,
            port: port,
            payload_type: ptype
        }
    };
    let data = await handle.join(details);
    // The property to read depends on the Janode version in use
    let abrtp = data.rtp_participant ? data.rtp_participant : data.rtp;

The attach method gets us a handle, and the join request on that handle allows us to send the related request to the AudioBridge plugin. Notice how we’re also mapping the handle we just created to the Call-ID we extracted from the INVITE: we may need that later. As you can see, we’re adding an rtp_participant object to the request, with the info we extracted from the SDP before: this is the syntax Janode expects to send an rtp payload to the plugin. The data object we get back will contain the response from the plugin itself, from which we extract the plugin rtp information. Notice that we check if we should access rtp_participant or rtp in the snippet above: this is because the Janode API was streamlined in 1.6.4, due to an ambiguity in the plain RTP participant usage of the Janode AudioBridge plugin, and so we take into account both newer and older versions of the library that way.

Once we have a response, we can craft an SDP using the info returned by the plugin, so that the SIP caller is aware of the plugin connectivity information for this media session. Again, the code is simplified and makes assumptions, but is good enough for a POC:

    // Now let's prepare an SDP to send back to the peer
    let mySdp =
        'v=0\r\n' +
        'o=sip2ab ' + new Date().getTime() + ' 1 IN IP4 1.1.1.1\r\n' +
        's=-\r\n' +
        't=0 0\r\n' +
        'm=audio ' + abrtp.port + ' RTP/AVP ' + abrtp.payload_type + '\r\n' +
        'c=IN IP4 ' + abrtp.ip + '\r\n' +
        'a=rtpmap:' + abrtp.payload_type + ' opus/48000/2\r\n' +
        'a=fmtp:' + abrtp.payload_type + ' maxplaybackrate=48000;stereo=1\r\n';

Now that we have an SDP, we can send it back to the caller and accept the INVITE we got. Considering this results in a new SIP dialog, we can use some facilities Drachtio provides to make our life easier: more specifically, we can programmatically tell the library we’ll act as a UAS in this context, so that Drachtio can manage the dialog for us. The simplified code looks like this:

    let uas = await srf.createUAS(req, res, { localSdp: mySdp });
    console.log(`Dialog established, remote URI is ${uas.remote.uri}`);
    uas.on('destroy', async () => {
        console.log('Caller hung up');
        let call = calls[callId];
        if(call && call.handle)
            await call.handle.detach();
        delete calls[callId];
    });

We’re creating a new UAS based on the INVITE request we got, and telling it to use the SDP we just crafted for the response to send back. We also intercept the destroy event, which is invoked by Drachtio when the dialog is torn down (e.g., in response to a BYE): we use that callback to consequently also detach the associated AudioBridge handle, since when the SIP caller goes away, so should the plain RTP participant we created on their behalf.
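The Call-ID to handle mapping and its cleanup could also be wrapped in a small class, as in the sketch below. The `CallRegistry` name and its methods are hypothetical; the only thing it assumes about a handle is that it exposes an async `detach()` method, as used in the snippet above. Using a `Map` rather than a plain object is a deliberate choice here, since Call-IDs come from the network and a `Map` has no inherited keys they could clash with.

```javascript
// Hypothetical registry tying SIP Call-IDs to AudioBridge handles.
class CallRegistry {
  constructor() {
    this.calls = new Map();
  }

  add(callId, handle) {
    this.calls.set(callId, { handle });
  }

  // Detaches the handle for a call, if any. Returns true if a call was
  // found. The entry is removed before detaching, so that a repeated
  // teardown event for the same call can't trigger a second detach.
  async remove(callId) {
    const call = this.calls.get(callId);
    if (!call) return false;
    this.calls.delete(callId);
    try {
      await call.handle.detach();
    } catch (err) {
      console.error(`Error detaching handle for ${callId}: ${err.message}`);
    }
    return true;
  }
}

// Demo with a fake handle standing in for a real AudioBridge handle
const demoRegistry = new CallRegistry();
demoRegistry.add('abc123@192.168.1.10', { detach: async () => console.log('Handle detached') });
demoRegistry.remove('abc123@192.168.1.10').then((found) => console.log('Call found:', found));
```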

That’s basically it! As you can see, the translation layer only took a few lines of code. Again, it’s very basic, since it makes a lot of assumptions and there’s basically no error management at all, but we’re only using it to do a functional test: making it more robust probably wouldn’t take that many more lines anyway.
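As an example of what a first step towards error management might look like, the sketch below wraps an INVITE handler so that any exception is turned into a SIP error response. Both `SipError` and `withSipErrors` are hypothetical names, the choice of 500 as a fallback status is an assumption, and the wrapper only relies on the `res.send(status)` call already seen in the snippets above. It also assumes the failure happens before a final response has been sent.

```javascript
// Hypothetical error type carrying the SIP status code to answer with
class SipError extends Error {
  constructor(sipStatus, message) {
    super(message);
    this.sipStatus = sipStatus;
  }
}

// Hypothetical wrapper: runs the handler and, if it throws, sends back
// the status from the SipError, or 500 for any other kind of failure.
function withSipErrors(handler) {
  return async (req, res) => {
    try {
      await handler(req, res);
    } catch (err) {
      const status = err instanceof SipError ? err.sipStatus : 500;
      console.error(`INVITE failed (${status}): ${err && err.message}`);
      res.send(status);
    }
  };
}

// Intended usage (not run here, since it needs a Drachtio server):
//   srf.invite(withSipErrors(async (req, res) => { /* POC logic */ }));

// Demo with a fake response object
const demoRes = { send: (status) => console.log(`Would send ${status}`) };
withSipErrors(async () => {
  throw new SipError(488, 'No Opus in the offer');
})({}, demoRes);
```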

To see if and how that works in practice, we can make use of existing Janus demos for the job. Let’s start by having a “regular” WebRTC participant join the default AudioBridge demo:

As a SIP endpoint to use with the POC, we can just use the Janus SIP plugin itself too: in fact, even if we’re using a browser, the end result is the SIP plugin creating a SIP dialog on our behalf, and using plain RTP for media on the SIP side. Any other SIP endpoint should work, though, as long as they support symmetric RTP. In our example, let’s use sip:alice@192.168.1.232 as our own URI, and then try to place a call to the sip:1234@192.168.1.232 address, which as explained above should add us to the AudioBridge room 1234:

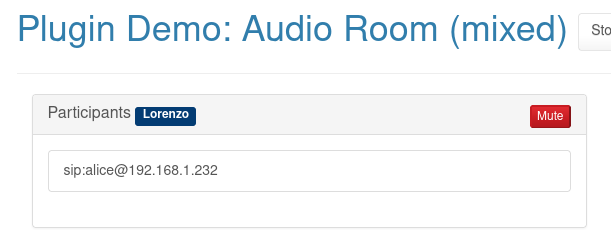

The POC server made of Drachtio and Janode will intercept that INVITE, and add us to the AudioBridge room. Looking at the AudioBridge demo page once more, it will show something like this:

Our SIP endpoint is now properly listed as a participant in the AudioBridge room, and audio flows in both directions too: mission accomplished!

That’s great! Can I do the same with the VideoRoom?

Not really, no… or at least not entirely. Getting the AudioBridge and SIP endpoints to work together is definitely made easier by the fact that the AudioBridge plugin uses a single PeerConnection to do everything, both sending your media and receiving a mix of everyone else. The same cannot be said for the VideoRoom plugin, which uses multiple PeerConnections for the job, in a publish/subscribe approach. Even using the multistream version of Janus, there are still at least two different PeerConnections involved in case of active participation, since one is used just for sending and one only for receiving; besides, in that case multiple streams are bundled in the same PeerConnection, which is something most SIP endpoints will not support anyway.

This means that, in such a case, you’d need an external component to take care of mixing the SFU streams for the SIP endpoints’ benefit, so something that goes beyond just translating the signalling layer. There are a couple of possible approaches here, shown in the two pictures below:

These images come from a presentation I made a couple of years ago, called “Can SFUs and MCUs be friends?”, and sketch a couple of ideas on how to bridge a Janus-based SFU to a legacy infrastructure. In both cases, it’s assumed that there’s an external component that takes care of video mixing. The two diagrams have a key difference, though:

- in the image on the left, all video streams are mixed, both SFU streams and SIP streams: this means that SIP endpoints see a mix of all participants (WebRTC and SIP), while WebRTC endpoints see all SIP endpoints in a single mixed video, whereas they can still subscribe to other WebRTC endpoints separately;

- in the image on the right, streams are only mixed on the SIP side: this means that SIP endpoints see a mix of all participants (WebRTC and SIP), while WebRTC users get to subscribe to SIP endpoints separately just as they do for WebRTC endpoints.

That said, while this is something we’ve helped several companies with in the past, it’s quite a large topic in itself, and is out of scope for this blog post. If you want to learn more, you can check the presentation I linked above; we also had a couple of very interesting presentations by Luca Pradovera and Giacomo Vacca at JanusCon, a couple of years ago, that proposed alternative approaches.

What about cascading AudioBridge instances?

At first glance, it may look like this plain RTP functionality in the AudioBridge plugin also opens the door to an interesting side effect, that is the ability to cascade AudioBridge instances. Cascading is basically the idea that, if you have 20 users to mix, you can mix 10 in one room, 10 in another, and then “join” the two different rooms together: this way, rather than having a single room mixing 20 participants (which can be CPU intensive), you have two rooms each mixing only 11 (the 10 participants, plus the other room, which is seen as a participant), which ostensibly takes fewer resources.
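The arithmetic behind that example can be written down as a tiny function. This is only a back-of-the-envelope sketch: `mixerInputsPerRoom` is a hypothetical name, and it assumes participants are spread evenly and that every room is linked to every other room, which is just one possible topology when more than two rooms are involved.

```javascript
// Hypothetical estimate of how many streams the busiest room has to mix
// when participants are spread evenly across cascaded rooms.
// Assumption: each room sees every other room as one extra participant.
function mixerInputsPerRoom(participants, rooms) {
  if (!Number.isInteger(participants) || participants < 0)
    throw new RangeError('participants must be a non-negative integer');
  if (!Number.isInteger(rooms) || rooms < 1)
    throw new RangeError('rooms must be a positive integer');
  const localParticipants = Math.ceil(participants / rooms);
  const linksToOtherRooms = rooms - 1;
  return localParticipants + linksToOtherRooms;
}

console.log(mixerInputsPerRoom(20, 1)); // single room: 20 streams to mix
console.log(mixerInputsPerRoom(20, 2)); // two cascaded rooms: 10 + 1 = 11 each
```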

That said, while that’s something we’d indeed like to do soon, that’s not currently possible with the implementation of plain RTP participants available at the time of writing. In fact, as anticipated before, this feature currently relies on the fact that the AudioBridge always expects the remote participant to take the initiative, and provide their own RTP address first: considering that to perform cascading, both AudioBridge instances would be a participant to the other, both waiting for the other to take the initiative would simply not get us anywhere. Adding the ability to also have the AudioBridge send an “offer” first would help break this impasse, so that’s part of the planned improvements on the plugin for the future.

That said, cascading is indeed already possible on the WebRTC side. In fact, for WebRTC participants the AudioBridge plugin already supports a mode where it’s the plugin that generates an offer, rather than waiting for one from the participant (which is the default). As such, orchestrating a set of Janus API calls so that one AudioBridge instance offers and the other answers would allow both to establish a WebRTC PeerConnection with each other, and exchange the respective audio mixes.

That’s all, folks!

I hope you enjoyed this short practical journey in the Janus+SIP world! As you can see from the snippets above, the code needed to get Janode and Drachtio to cover the POC requirements was really short, so in case you’re interested in tinkering with them yourself, I definitely encourage you to try and set up a small testbed of your own.