This pandemic we’ve all been living in these past few months has forced many people and companies to change their daily patterns. Most of us have had to stay and work from home for long periods of time. This has clearly had an impact on the broadcasting industry in general. Just think, for instance, of interviews or any kind of live interaction in news or talk shows: without the ability to have guests (and often even hosts!) in the studios, production teams were forced to find solutions that would still allow them to do their job.

This may seem like a problem with a relatively straightforward solution to us folks working in the real-time business. In practice, it’s really not that easy, and most of the difficulty comes from how production teams actually work with the streams they need to edit and broadcast, and from the tools they employ. As often happens, I was enlightened by the smart people we interact with every day on Twitter. In particular, Dan Jenkins from Nimble Ape (and the mastermind behind the amazing CommCon events: make sure you watch the latest Virtual edition that just ended!) made an interesting observation related to BBC News, and Sam Machin’s answer was revealing to me, as it introduced a new “player” I wasn’t aware of:

This was the first time I ever heard of NDI, and it was kind of a revelation: it reminded me how some choices are often driven by how easy they make it to keep on using your existing workflow. This sparked my interest, and led me to contribute to the discussion, where I wondered how easy (or hard!) it could be to perform an RTP-to-NDI translation of sorts:

The mention of OBS was quite interesting as well, since our friends at CoSMo Software have actually implemented a version of OBS capable of talking WebRTC. Unfortunately, this turned out to be a red herring: as Dr. Alex clarified, OBS-WebRTC doesn’t support WebRTC input, which is what could have helped translate the incoming stream to NDI from there:

I was really curious to investigate NDI as a technology after these exchanges (especially considering I knew nothing about it), but then life and work got in the way, and I forgot about it.

A few weeks ago, though, a private chat with Dan sparked that interest again. He mentioned how a video production company he knew was really struggling to get proper live feeds from the Internet to use in what they were producing every day. Once more, NDI came up as pretty much a de facto standard in that line of business, and a proper way to bring in WebRTC streams was described as still sorely missing, which forces editors to basically capture the source material in “hacky” ways.

As such, I decided to start looking into NDI, to figure out if the RTP-to-NDI translation I wondered about was indeed feasible or not.

First of all, what’s NDI?

Quoting Wikipedia (because I’m lazy):

Network Device Interface (NDI) is a royalty-free software standard developed by NewTek to enable video-compatible products to communicate, deliver, and receive broadcast-quality video in a high-quality, low-latency manner that is frame-accurate and suitable for switching in a live production environment.

In a nutshell (and probably oversimplifying it a bit), it allows for a live exchange of multichannel and uncompressed media streams within the same LAN, taking advantage of mDNS for service discovery. This allows producers to consume media from different sources in the same LAN, i.e., without being limited to the devices physically attached to their setups. The following image (which comes from their promotional material) makes the whole process a bit clearer from a visual perspective.

Apparently, the real strength of the technology is the royalty-free SDK they provide, together with the wide set of tools that can actually interact with NDI media. Once a stream becomes available, no matter the source, it’s easy to cut, manipulate and process it in order to embed it in a more complex production environment. Because of this, NDI is very widely deployed in many media production companies.
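Since discovery relies on mDNS, NDI senders can in principle be browsed like any other DNS-SD service. The SDK does all of this for you, so the sketch below only illustrates what goes on under the hood. It assumes that senders advertise themselves under the `_ndi._tcp` service type, which should be verified against the SDK documentation. The code builds a one-question PTR query with the standard library and multicasts it to 224.0.0.251:5353. Because the query is sent from an ephemeral port, responders should answer via unicast ("legacy unicast" in RFC 6762). Parsing the responses is left out.

```python
import socket
import struct

MDNS_GROUP = ("224.0.0.251", 5353)
# Assumption: the DNS-SD service type NDI senders advertise themselves under.
NDI_SERVICE = "_ndi._tcp.local"


def encode_name(name: str) -> bytes:
    """Encode a DNS name as length-prefixed labels ending with a zero byte."""
    out = b""
    for label in name.rstrip(".").split("."):
        raw = label.encode("ascii")
        out += bytes([len(raw)]) + raw
    return out + b"\x00"


def build_ptr_query(service: str = NDI_SERVICE) -> bytes:
    """Build a one-question DNS query asking for PTR records of `service`."""
    # Header: ID=0, flags=0 (standard query), QDCOUNT=1, no other records.
    header = struct.pack("!HHHHHH", 0, 0, 1, 0, 0, 0)
    # Question: QTYPE=12 (PTR), QCLASS=1 (IN).
    return header + encode_name(service) + struct.pack("!HH", 12, 1)


def browse(timeout: float = 2.0) -> list:
    """Multicast the query and collect raw (address, response) pairs until timeout."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_MULTICAST_TTL, 255)
    sock.settimeout(timeout)
    responses = []
    try:
        sock.sendto(build_ptr_query(), MDNS_GROUP)
        while True:
            data, addr = sock.recvfrom(9000)
            responses.append((addr, data))
    except socket.timeout:
        pass
    finally:
        sock.close()
    return responses
```

Calling `browse()` on a LAN with NDI senders should return their raw DNS answers, which contain the advertised instance names.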

Which brings us to the key issue: if this is the way remote interviews are produced as well, producers will likely try to use whatever makes it easy for them to get an NDI source they can use directly. Apparently, WebRTC is still hard for them to work with. Without a way to pull a WebRTC feed in directly, they’re basically forced to use workarounds: capturing the browser window rendering the remote video, cropping what they need using an NDI recorder, and injecting the result that way. For audio, it might mean recording the system output or similar hacks.

Needless to say, this is a suboptimal solution that requires more work than should be needed: besides, it can be even more challenging if the web page that renders the streams has dynamic interfaces that could move the part you’re interested in around, or even limited functionality (e.g., no screen sharing support). That’s exactly why I started looking into ways to get RTP more or less directly translated to NDI, in order to make the whole process much easier to work with instead.

An RTP/NDI gateway

The first thing I checked was whether there was already some RTP/NDI implementation available, and the answer was yes and no. The first project I found was an open source plugin called gst-plugin-ndi. This project is quite interesting, but unfortunately it only covers NDI reception, not delivery. As I’ll explain later, it still proved quite helpful to validate my efforts, but it was not what I was looking for. A quick search for FFmpeg integrations led me to this ticket, which explained how FFmpeg did indeed support NDI up until some time ago, but then dropped it entirely after a copyright infringement issue involving NewTek. As such, not an option either.

The next step, then, was looking into ways to generate NDI content myself and, luckily enough, this was quite straightforward. In fact, the NDI SDK is royalty-free, and can be downloaded free of charge from their website (a link is sent to an email address you provide). Once you download the SDK, you have access to good documentation and plenty of examples. I went through some of the examples that showed how to send audio and video, and they seemed simple enough. For video, NDI expects uncompressed frames in one of a few supported formats, while for audio the uncompressed frames can be either 16-bit interleaved or FLTP (planar floating point, which they prefer).
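To make the two audio options concrete: libopus’s `opus_decode()` returns interleaved signed 16-bit samples (and `opus_decode_float()` returns interleaved floats). FLTP, on the other hand, means one contiguous float plane per channel. The standard-library sketch below turns the former into the latter, normalizing by 32768 so that samples fall in [-1.0, 1.0). How the planes are then described to the SDK (channel stride and so on) is deliberately left out.

```python
import array


def interleaved_s16_to_planar_float(pcm: bytes, channels: int) -> list:
    """Split interleaved native-endian int16 PCM into one float32 plane per channel."""
    samples = array.array("h")
    samples.frombytes(pcm)
    if len(samples) % channels:
        raise ValueError("sample count is not a multiple of the channel count")
    planes = []
    for ch in range(channels):
        # Every `channels`-th sample, starting at this channel's offset.
        planes.append(array.array("f", (s / 32768.0 for s in samples[ch::channels])))
    return planes


def planes_to_bytes(planes: list) -> bytes:
    """Lay the planes out back to back: all of channel 0, then channel 1, and so on."""
    return b"".join(p.tobytes() for p in planes)
```

Going the other way is just as easy, which is why picking 16-bit or FLTP is mostly a matter of what the decoder already produces.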

Considering the nature of WebRTC media, I decided to write a small RTP receiver application (called rtp2ndi in a brilliant spike of creativity) that could depacketize and decode audio and video packets to a format NDI liked. More specifically, I used libopus to decode the audio packets, and libavcodec to decode video (limiting myself to VP8 for the sake of simplicity). As for the NDI formats, I went with UYVY for video frames, and 48 kHz 16-bit samples for audio (which the NDI SDK supports, even though FLTP is preferred).
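On the video side, the format juggling comes from libavcodec’s VP8 decoder handing out planar YUV 4:2:0 (I420) frames, while UYVY is packed 4:2:2. In C, the natural choice would probably be to let libswscale convert to `AV_PIX_FMT_UYVY422`. The pure-Python sketch below just spells out the byte layout. It assumes even dimensions and tightly packed planes with no line padding, and it simply reuses each chroma row for two luma rows rather than interpolating.

```python
def i420_to_uyvy(frame: bytes, width: int, height: int) -> bytes:
    """Convert a tightly packed I420 frame (Y, then U, then V planes) to packed UYVY."""
    if width % 2 or height % 2:
        raise ValueError("width and height must be even")
    y_size = width * height
    c_width = width // 2
    c_size = c_width * (height // 2)
    if len(frame) != y_size + 2 * c_size:
        raise ValueError("unexpected I420 frame size")
    y_plane = frame[:y_size]
    u_plane = frame[y_size:y_size + c_size]
    v_plane = frame[y_size + c_size:]
    out = bytearray(width * height * 2)  # UYVY uses 2 bytes per pixel
    o = 0
    for row in range(height):
        y_row = row * width
        c_row = (row // 2) * c_width  # one chroma row serves two luma rows
        for x in range(0, width, 2):
            c = c_row + x // 2
            # Each 4-byte group covers two pixels: U, Y0, V, Y1.
            out[o:o + 4] = bytes((u_plane[c], y_plane[y_row + x],
                                  v_plane[c], y_plane[y_row + x + 1]))
            o += 4
    return bytes(out)
```

For a 2x2 frame with luma 1, 2, 3, 4 and chroma U=5, V=6, this produces `5 1 6 2 5 3 6 4`.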

To test this, I needed two different things:

- something to feed audio and video frames via RTP to my tool;

- something to receive the translated streams via NDI.

For the former, I used one of the many streaming scripts I often use for testing purposes, specifically a simple gstreamer pipeline that goes through a pre-recorded file and sends it via RTP. For the latter, I used the above-mentioned gst-plugin-ndi project, which provided me with a simple way to render the incoming feed in a window.
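The script isn’t included here, but a sender of that kind can be put together with stock GStreamer elements. The sketch below is one possible equivalent, not the script itself. It assumes a local media file, VP8 and Opus encoding, payload types 100 and 111, and RTP sent to 127.0.0.1 on ports 5004 (video) and 5002 (audio); all of these values are arbitrary. The small Python wrapper, a hypothetical helper, only exists to make those values easy to tweak.

```python
import subprocess
import sys


def build_sender_cmd(path: str, host: str = "127.0.0.1",
                     video_port: int = 5004, audio_port: int = 5002) -> list:
    """Return a gst-launch-1.0 argv that re-encodes a file to VP8/Opus and sends it over RTP."""
    # Video branch: decoded video -> VP8 (realtime deadline) -> RTP (PT 100) -> UDP.
    video = (f"d. ! queue ! videoconvert ! vp8enc deadline=1 ! rtpvp8pay pt=100 ! "
             f"udpsink host={host} port={video_port}")
    # Audio branch: decoded audio -> Opus -> RTP (PT 111) -> UDP.
    audio = (f"d. ! queue ! audioconvert ! audioresample ! opusenc ! rtpopuspay pt=111 ! "
             f"udpsink host={host} port={audio_port}")
    return (["gst-launch-1.0", "filesrc", f"location={path}", "!", "decodebin", "name=d"]
            + video.split() + audio.split())


if __name__ == "__main__":
    subprocess.run(build_sender_cmd(sys.argv[1]), check=True)
```

Re-encoding rather than just remuxing keeps the sketch independent of the container and codecs of the source file.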

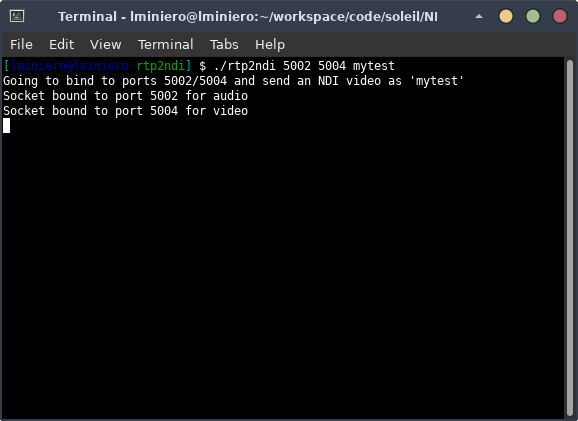

As such, I started by launching my rtp2ndi tool, binding it to a couple of ports to receive RTP packets, and specifying a name to use for the NDI stream:

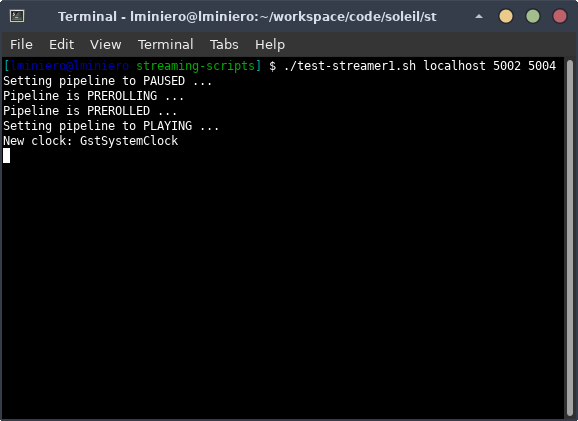
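Receiving the packets is the least exciting part: bind one UDP socket per media, read datagrams, and parse the fixed RTP header (RFC 3550) to get at the payload. The Python sketch below only illustrates that step. The port numbers are hypothetical, header extensions are skipped rather than interpreted, and RTCP is ignored.

```python
import select
import socket
import struct
from typing import Dict, Iterator, NamedTuple, Tuple


class RtpPacket(NamedTuple):
    payload_type: int
    marker: bool
    seq: int
    timestamp: int
    ssrc: int
    payload: bytes


def parse_rtp(data: bytes) -> RtpPacket:
    """Parse the fixed RTP header, skipping CSRCs, header extension and padding."""
    if len(data) < 12:
        raise ValueError("too short for an RTP header")
    b0, b1, seq, ts, ssrc = struct.unpack("!BBHII", data[:12])
    if b0 >> 6 != 2:
        raise ValueError("not RTP version 2")
    offset = 12 + 4 * (b0 & 0x0F)          # skip CSRC list
    if b0 & 0x10:                          # X bit: header extension present
        if len(data) < offset + 4:
            raise ValueError("truncated header extension")
        (ext_words,) = struct.unpack("!H", data[offset + 2:offset + 4])
        offset += 4 + 4 * ext_words
    end = len(data)
    if b0 & 0x20:                          # P bit: last byte holds the padding length
        end -= data[-1]
    if offset > end:
        raise ValueError("malformed RTP packet")
    return RtpPacket(b1 & 0x7F, bool(b1 & 0x80), seq, ts, ssrc, data[offset:end])


def receive(ports: Dict[str, int]) -> Iterator[Tuple[str, RtpPacket]]:
    """Bind one UDP socket per media (e.g. {"audio": 5002, "video": 5004}) and yield packets."""
    socks = {}
    for media, port in ports.items():
        s = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
        s.bind(("0.0.0.0", port))
        socks[s] = media
    while True:
        readable, _, _ = select.select(list(socks), [], [])
        for s in readable:
            data, _ = s.recvfrom(2048)
            try:
                packet = parse_rtp(data)
            except ValueError:
                continue  # not valid RTP: ignore it
            yield socks[s], packet
```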

Once the RTP receiver was in place, I started the script to feed it with packets:

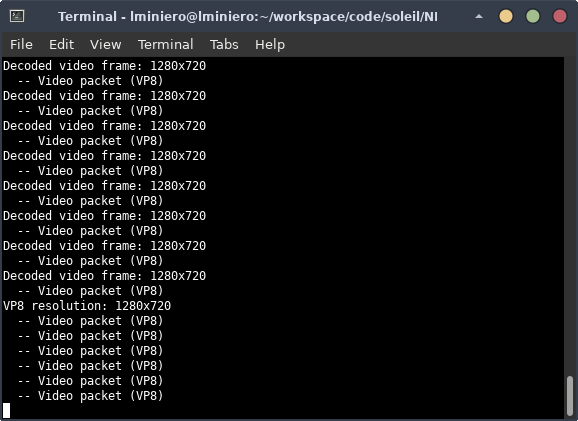

This caused my RTP receiver to start receiving and decoding packets, as expected, translating them to NDI in the process:

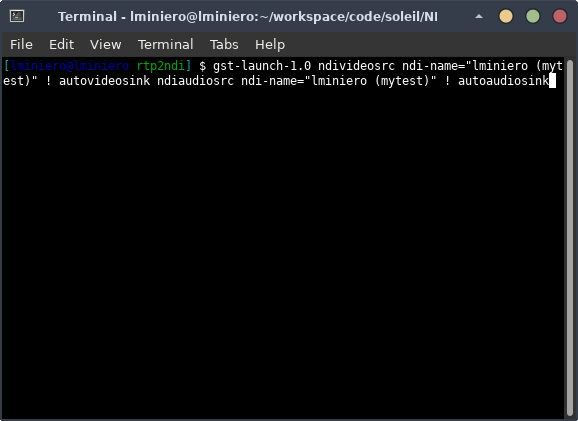
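Before VP8 can be decoded, full frames have to be reassembled from packets. According to RFC 7741, each RTP payload starts with a VP8 payload descriptor that must be stripped. A new frame begins where the S bit is set with partition index 0, and the RTP marker bit flags the frame’s last packet. The Python sketch below is a simplified take on that process. It does no reordering, and any sequence gap simply drops the frame in progress. Its output is what would be handed to the decoder (libavcodec, in this case).

```python
def strip_vp8_descriptor(payload: bytes) -> tuple:
    """Return (starts_frame, vp8_data) for one RTP payload, per RFC 7741."""
    if not payload:
        raise ValueError("empty VP8 payload")
    b0 = payload[0]
    # S bit set and partition index 0: first packet of a new frame.
    starts_frame = bool(b0 & 0x10) and (b0 & 0x07) == 0
    i = 1
    try:
        if b0 & 0x80:                  # X: extension byte follows
            ext = payload[i]
            i += 1
            if ext & 0x80:             # I: PictureID present
                # M bit: 15-bit PictureID (2 bytes) instead of 7-bit (1 byte).
                i += 2 if payload[i] & 0x80 else 1
            if ext & 0x40:             # L: TL0PICIDX byte present
                i += 1
            if ext & 0x30:             # T or K: TID/Y/KEYIDX byte present
                i += 1
    except IndexError:
        raise ValueError("truncated VP8 payload descriptor") from None
    if i > len(payload):
        raise ValueError("truncated VP8 payload descriptor")
    return starts_frame, payload[i:]


class Vp8FrameAssembler:
    """Concatenate VP8 data from consecutive packets until the marker bit ends a frame."""

    def __init__(self):
        self.parts = []
        self.expected_seq = None

    def push(self, seq: int, marker: bool, payload: bytes):
        """Feed one packet; return a complete frame when one is ready, else None."""
        starts_frame, data = strip_vp8_descriptor(payload)
        if starts_frame:
            self.parts = []            # drop any incomplete previous frame
        elif not self.parts or seq != self.expected_seq:
            self.parts = []            # loss or missing start: wait for the next frame
            return None
        self.parts.append(data)
        self.expected_seq = (seq + 1) & 0xFFFF   # 16-bit sequence numbers wrap around
        if marker:
            frame = b"".join(self.parts)
            self.parts = []
            return frame
        return None
```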

At this point, all I had to do was start the gstreamer pipeline to actually receive the NDI stream and display it:

And voilà! One of my favourite SNL clips in all its beauty!
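One detail that matters when relaying decoded frames is timing. NDI timecodes are expressed in 100 ns units, and the SDK can also synthesize them on its own. RTP timestamps, instead, tick at the media clock rate (90 kHz for VP8, 48 kHz for Opus) and wrap around at 2^32. If the original RTP timing is to be carried over, a conversion along these lines would be needed. This is an assumption about one possible approach, not necessarily what rtp2ndi does. The sketch unwraps 32-bit timestamps and rescales them, relative to the first timestamp seen.

```python
class RtpToNdiTime:
    """Unwrap 32-bit RTP timestamps and rescale them to 100 ns units.

    Results are relative to the first timestamp seen, so they can be added
    to whatever base time the sender chooses.
    """

    def __init__(self, clock_rate: int):
        self.clock_rate = clock_rate  # e.g. 90000 for VP8, 48000 for Opus
        self.first = None
        self.last = None
        self.wraps = 0  # net 32-bit wraparounds of `last` relative to `first`

    def convert(self, ts: int) -> int:
        if self.first is None:
            self.first = self.last = ts
        else:
            forward = ((ts - self.last) & 0xFFFFFFFF) < 0x80000000
            if forward and ts < self.last:
                self.wraps += 1   # moved forward across the wrap point
            elif not forward and ts > self.last:
                self.wraps -= 1   # late packet from before the wrap point
            self.last = ts
        ticks = self.wraps * (1 << 32) + ts - self.first
        return ticks * 10_000_000 // self.clock_rate
```

For a 90 kHz video clock, a timestamp 90000 ticks after the first one maps to 10,000,000, i.e., one second in 100 ns units.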

With a good starting point in place, the next step was trying to bring WebRTC into the picture. Unsurprisingly, I went with Janus for the job…

Bringing WebRTC (and Janus) in the picture

A relatively easy way to test WebRTC sources, instead of scripts sending SNL clips, could have been the usual RTP forwarders. As I’ve said on multiple occasions (including my CommCon presentation just a few days ago), we use them A LOT, and they wouldn’t be out of place here either. It would simply be a matter of creating a VideoRoom publisher, RTP-forwarding its streams to the rtp2ndi tool, and the job would be done. For a few reasons I’ll get into later, though, I wanted something more integrated, and something that could be used on its own.
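For completeness, here is roughly what that alternative would involve: a VideoRoom `rtp_forward` request, sent as a regular Janus API `message` on a handle attached to the VideoRoom plugin. The sketch only builds the JSON and leaves the transport out. The room, publisher ID, secret, host and ports are hypothetical. The exact fields depend on the Janus version; this uses the older per-media `audio_port`/`video_port` syntax.

```python
import json
import uuid
from typing import Optional


def rtp_forward_request(room: int, publisher_id: int, host: str,
                        audio_port: int, video_port: int,
                        secret: Optional[str] = None) -> dict:
    """Build a Janus API message asking the VideoRoom to RTP-forward a publisher."""
    body = {
        "request": "rtp_forward",
        "room": room,
        "publisher_id": publisher_id,
        "host": host,              # where rtp2ndi is listening
        "audio_port": audio_port,  # older, per-media port syntax
        "video_port": video_port,
    }
    if secret is not None:
        body["secret"] = secret    # room secret, if the room was created with one
    return {"janus": "message", "transaction": uuid.uuid4().hex, "body": body}


if __name__ == "__main__":
    # Hypothetical values: room 1234, publisher 5678, rtp2ndi on 192.168.1.10.
    print(json.dumps(rtp_forward_request(1234, 5678, "192.168.1.10", 5002, 5004), indent=2))
```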

This led me to write a new Janus plugin that would allow a WebRTC user to:

- publish audio and/or video via WebRTC through Janus;

- decode the published streams;

- translate them to NDI.
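In Janus API terms, the publishing step in the list above boils down to a regular `message` request that carries some plugin-specific parameters (here, the name of the NDI sender) plus the browser’s SDP offer in a `jsep` object. The plugin isn’t public, so the body fields in this sketch (`"request": "translate"`, `"name"`) are hypothetical. The SDP helper is also a simplification: it ignores session-level direction attributes and only checks that every media section is sendonly, since the plugin only needs to receive.

```python
import uuid

DIRECTIONS = ("sendrecv", "sendonly", "recvonly", "inactive")


def media_directions(sdp: str) -> list:
    """Return (media kind, direction) for each m= section of an SDP.

    Session-level direction attributes are ignored for simplicity.
    """
    sections = []
    for line in sdp.splitlines():
        if line.startswith("m="):
            sections.append([line[2:].split(" ", 1)[0], "sendrecv"])  # SDP default
        elif sections and line.startswith("a=") and line[2:] in DIRECTIONS:
            sections[-1][1] = line[2:]
    return [tuple(s) for s in sections]


def ndi_publish_message(ndi_name: str, offer_sdp: str) -> dict:
    """Build a Janus API message for a (hypothetical) WebRTC-to-NDI plugin."""
    if not ndi_name.strip():
        raise ValueError("an NDI sender name is required")
    if any(d != "sendonly" for _, d in media_directions(offer_sdp)):
        raise ValueError("expected a sendonly offer")
    return {
        "janus": "message",
        "transaction": uuid.uuid4().hex,
        # Hypothetical plugin-specific body: field names are illustrative only.
        "body": {"request": "translate", "name": ndi_name},
        # Standard Janus API: the SDP offer travels in the "jsep" object.
        "jsep": {"type": "offer", "sdp": offer_sdp},
    }
```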

In a nutshell, I wanted to combine three things in this new plugin: have a WebRTC publisher take over the injection role the gstreamer pipeline played, take advantage of the integrated RTP server functionality in the Janus core for receiving the packets, and embed the RTP-to-NDI process from rtp2ndi. This turned out to be quite straightforward, so I came up with a simple demo page that would allow a web user to insert a name for the NDI sender, and then set up a sendonly PeerConnection with Janus, as several other plugins do already:

Negotiating a new PeerConnection instructs the plugin to create the related resources, i.e., an Opus decoder, a VP8 decoder, and an NDI sender with the provided name. Once a PeerConnection has been established, the demo looks like this:

As you can see, nothing groundbreaking: we’re simply displaying the local stream the browser is capturing and sending to Janus. The Janus core then passes the unencrypted audio and video packets to the plugin as usual. The Janus NDI plugin is configured to decode them and relay the uncompressed frames via NDI (exactly as rtp2ndi did before), which means the stream originated via WebRTC is now available to NDI recipients as well, as we can once again confirm via the gstreamer NDI plugin:

This was already quite exciting, but still wasn’t what I was really trying to accomplish. In fact, if you think about it, this does indeed allow Janus to act as a WebRTC/NDI gateway, but doesn’t help much when the Janus server handling the conversation you’re interested in is actually on the Internet, rather than in your LAN (which would almost always be the case). If Janus is sitting somewhere on the Internet, e.g., on AWS, there’s not much you can do with the NDI stream created there. Actually, there’s nothing you can do at all, as NDI only really makes sense when all the streams are exchanged within your LAN.

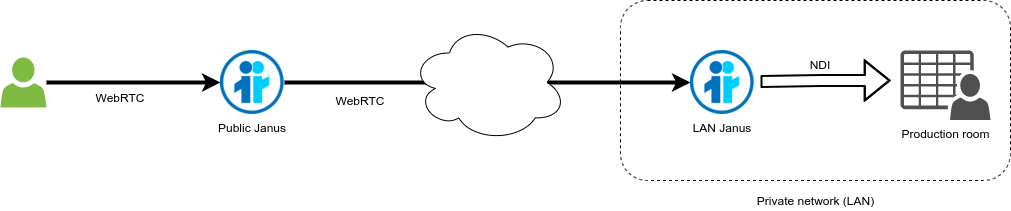

As such, I decided to take this one step further. More specifically, I decided to try and have two Janus instances talk to each other:

- a Janus on the public internet (e.g., the one hosting the online demos);

- a Janus in my LAN.

The purpose was simple: use the Janus in my LAN to consume, via WebRTC, a stream actually served by the Janus on the public internet, so that the local Janus instance might translate that remote feed to an NDI stream I could indeed access locally. Long story short, use my local Janus instance as a WebRTC “client” that receives media from the remote Janus instance, and gateway it to NDI, as the picture below sketches:

While this sounds convoluted and is indeed a bit unconventional, it’s actually not unusual to have Janus instances talk to each other that way. Some people use this trick to inject media coming from one plugin into another via WebRTC, for instance. Getting this to work is usually just a matter of orchestrating Janus API calls on both sides. The SDP offer of one instance (and possibly its candidates, when trickling) is passed to the other, and the SDP answer is sent back accordingly, thus getting the two Janus instances to negotiate a PeerConnection with each other. That’s the beauty of working with a standard like WebRTC!

I decided to try and do exactly that: in particular, I decided to subscribe to the default Streaming mountpoint on the Janus online demos (the Meetecho spot), and use the related offer as a trigger to negotiate a new PeerConnection with my Janus NDI plugin. In fact, Streaming plugin subscriptions are functionally equivalent to VideoRoom (SFU) subscriptions in Janus, which means they’re an easy and yet effective way to validate the deployment.
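The orchestration itself mostly consists of shuffling JSEP objects between two Janus API sessions. The sketch below makes a few assumptions:

- both handles (Streaming plugin on the remote Janus, NDI plugin on the local one) have already been created and attached;
- each handle is abstracted as a callable that sends a Janus API message and returns the event carrying the plugin response, so HTTP long polling versus WebSockets is left out;
- full SDPs are exchanged, so trickle candidates are ignored.

The Streaming side uses its regular `watch` and `start` requests. The body sent to the NDI plugin is hypothetical, since that plugin isn’t public.

```python
from typing import Callable

# A send function takes a Janus API request and returns the resulting event
# (plugin response plus an optional "jsep" object); transport is out of scope.
SendFn = Callable[[dict], dict]


def bridge(remote: SendFn, local: SendFn, mountpoint_id: int, ndi_name: str) -> None:
    """Have a local Janus subscribe, via WebRTC, to a remote Streaming mountpoint."""
    # 1. Ask the remote Streaming plugin to watch the mountpoint: it replies with an offer.
    event = remote({"janus": "message",
                    "body": {"request": "watch", "id": mountpoint_id}})
    offer = event.get("jsep")
    if not offer or offer.get("type") != "offer":
        raise RuntimeError("no SDP offer from the remote Streaming plugin")
    # 2. Hand the offer to the local NDI plugin (hypothetical body), which answers.
    event = local({"janus": "message",
                   "body": {"request": "translate", "name": ndi_name},
                   "jsep": offer})
    answer = event.get("jsep")
    if not answer or answer.get("type") != "answer":
        raise RuntimeError("no SDP answer from the local NDI plugin")
    # 3. Send the answer back to the remote Streaming plugin to start the stream.
    remote({"janus": "message", "body": {"request": "start"}, "jsep": answer})
```

Keeping the transport behind two callables makes the negotiation logic easy to test with fakes, and easy to reuse with whatever client library is at hand.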

And a bit of orchestration code later…

Eureka! The video from the remote Streaming mountpoint on the online demos was successfully pulled via WebRTC by the local Janus instance as a subscriber, and correctly translated to NDI as the gstreamer NDI recipient once more confirmed.

That’s all, folks!

I hope you enjoyed this short introduction to what seems, to me, an interesting new opportunity. Of course, this was mostly meant to share some initial efforts in that direction. There’s still work to do, especially considering this hasn’t really been tested in an actual production environment, or with any NDI tools other than the gstreamer NDI plugin, so some of the assumptions I made may need some tweaking. Should this become more usable in the future, I’ll also consider sharing the code itself.

More importantly, while my proof of concept kinda works already, it may be argued it’s not the best way to do things. As we’ve seen in the previous section, the Janus instance handling the conversation/streams you’re interested in and the Janus instance taking care of the NDI translation would most often be different. In that case, two Janus instances have to establish one or more PeerConnections with each other, with the help of an orchestrator. This works, but needing a Janus instance in the production LAN just for the NDI translation process might be seen as overkill.

A potentially better alternative could be creating a new ad-hoc client application able to perform pretty much the same things, that is:

- establish a PeerConnection for the purpose of receiving audio and/or video via WebRTC;

- depacketize and decode the incoming streams;

- translate the decoded streams to NDI.

Most of the complexity, usually, lies in the WebRTC stack, which is why in my proof of concept I stuck with Janus, as it gave me that pretty much for free. That said, there are many options that can be leveraged for that purpose in the open source world: besides, our own Jattack testing application is basically exactly that, and so could be retrofitted to support the additional NDI translation process with a bit of work.

Whatever the approach, this would allow interested people to just spawn a client-side application and point it to the server it needs media from, and would basically give them more control over the whole process. The application may be configured to just connect to a room, for instance, and automatically subscribe to all contributions; or it may be instructed to pick streams coming from different sources. In a nutshell, it could do anything the generic external orchestration mechanism we sketched before provided, but in a more integrated way. A dedicated application would open the door to additional customizations on the client side too: for instance, rather than having a CLI application running on a server, this application may have its own UI and run on your desktop instead.

Assuming there’s enough interest in this effort, that’s exactly what I plan to focus on next. Maybe not the UI part (I’m really bad with those!), but a dedicated client would indeed be cool to have!