SFU cascading has been a hot topic for quite some time. There are different reasons why you may want to cascade an SFU implementation: you may want to scale a room so that multiple servers cooperate with each other to distribute the same streams, for instance, or more simply you may want to ensure that subscribers always get the media from the server that is closest to them, rather than force them to join the instance where the streams actually originate. Whatever the reason, it’s indeed a useful tool to have in your belt, and so we started working on adding this feature to our own SFU plugin in Janus, the VideoRoom plugin, in a new pull request.

While the work isn’t 100% complete yet, I thought I’d start sharing some details on how it currently works, what can be improved, and what could be added further along the road.

Wait, didn’t Janus have this already?

Yes and no!

We definitely did work a lot on cascading and scalability features in Janus in the past few years, but this always involved more or less creative approaches, that typically involved different plugins and a careful orchestration of how these plugins would be used for the purpose. More precisely, we implemented a behaviour like this using two separate plugins:

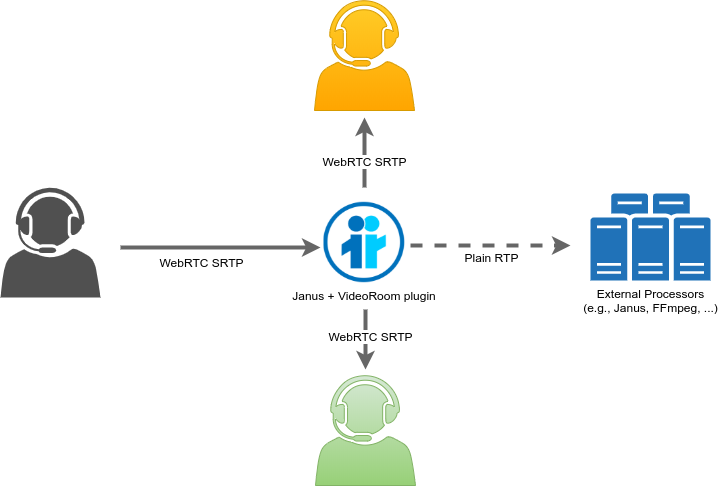

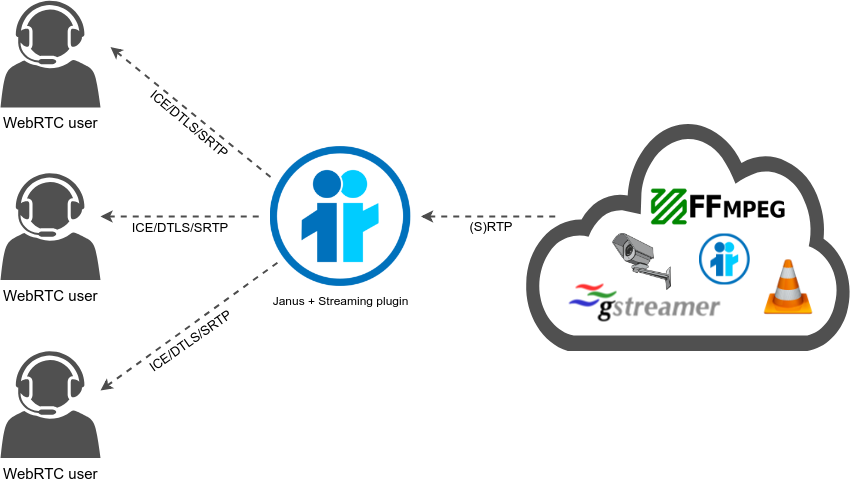

The reason for this was quite simple: the VideoRoom plugin implements SFU functionality in Janus, and as such also provides a simple way for publishing media to Janus via WebRTC. The Streaming plugin, instead, implements broadcasting functionality, and as such is optimized for distributing a more or less static stream to many subscribers at the same time. Considering the Streaming plugin implements rebroadcasting of plain RTP streams via WebRTC (making it an excellent tool to turn streams contributed by applications like FFmpeg or GStreamer into WebRTC), and that the VideoRoom plugin provides a simple way to forward an incoming WebRTC stream to plain RTP instead (thus making it a very flexible tool any time a WebRTC stream must be made available to non-WebRTC compliant applications, e.g., for processing), using the two together is quite straightforward, and allows for an easy decoupling of the source stream from the components that will actually take care of distributing it. The two separate functionalities are sketched in the diagrams below to make them simpler to understand.

This means that, if someone is publishing a WebRTC stream via the VideoRoom, we can RTP-forward it to a Streaming plugin instance on a different Janus instance, and the same stream will be available for consumption there as well; do the same with several Streaming plugin instances at the same time, and you’ll have distributed the same single stream across multiple Janus instances, making it easy for subscribers in different regions and/or a wider audience to subscribe to the stream, independently of the Janus instance it is being injected into.

This powerful mechanism is indeed at the basis of several services we provide. Our own Meetecho Virtual Event Platform, for instance, is based on this exact concept: you can find more information on how that’s implemented in practice in different presentations, like this CommCon talk from a couple of years ago, or this overview on how we provide remote participation support for IETF meetings. This mechanism is also at the foundation of our SOLEIL large scale WebRTC broadcasting architecture, which was the topic of my Ph.D a few years ago: again, a more in depth description of what that entails can be found in different presentations I’ve made recently, like this FOSDEM talk from a few months ago. A basic diagram of both application scenarios can be seen below, even though for a better understanding of how they actually work I encourage you to check the presentations linked above.

Now, while this approach is very flexible and powerful, and did allow us to provide considerable scalability functionality to multiple applications (for ourselves and others), it does have a few shortcomings.

First of all, the fact it involves different plugins means that signalling is impacted as a consequence. In fact, if someone is publishing in a VideoRoom instance, and then distributed via Streaming plugin mountpoints, this means you can’t rely on any VideoRoom signalling to be notified, e.g., about publishers joining or leaving a room, but that you have to implement a custom signalling channel for the purpose. This is not a big deal in general (the majority of Janus based applications do provide their own custom signalling, to better control Janus instances as media servers), but it needs to be taken into account.

Another limitation is due to the nature of the Streaming plugin, which is based on the concept of “mountpoints” that are, by definition, quite static. A Streaming plugin mountpoint is indeed a static endpoint that rebroadcasts whatever it receives via RTP to WebRTC subscribers, and is completely independent of the source that is feeding it. This has several advantages, but can be a bit more of a challenge if you indeed want to somehow tie it to the source itself: if a publisher starts as audio-only, and then wants to add video, that’s not something you can do via the Streaming plugin, where the mountpoint must be created and configured in advance, meaning that to accommodate this you’d have to create an audio-video mountpoint in advance, and only start feeding video if/when it actually becomes available. This has never been a problem for our own use cases, as the Virtual Event Platform handles audio and video through separate channels (audio is mixed via the AudioBridge, and video is relayed through the VideoRoom), but it could be a problem in applications that were designed in a different way.

The main problem of this approach, though, is that it’s very tightly built around how Janus worked up until a few months ago, that is the concept that you could only have up to one audio and/or video stream per PeerConnection: with such a constraint, it’s indeed very easy to associate an audio/video publisher with a series of audio/video Streaming plugin mountpoints, as each source can be handled, and distributed, completely independently of each other. This becomes an issue the moment you want to start taking advantage of the recently merged multistream functionality in Janus, though: if you want to create a single PeerConnection to subscribe to several sources at the same time (sources that may be originated at different Janus instances), this approach doesn’t work anymore, because even though the Streaming plugin does support multistream, it’s quite limited in how that can be used. In fact, as we’ve mentioned before, a Streaming mountpoint is a very static endpoint: you pre-create it in advance with what you expect it will have to distribute, and then it does its job. So how do you deal with the possibility of having to distribute multiple publishers at the same time, and in a dynamic fashion? One possibility may be pre-creating a high number of m-lines in a mountpoint, to account for a possibly higher number of sources, but: (i) this would easily result in a lot of unused m-lines for a possibly long time, (ii) it would put a cap on how many publishers you can indeed distribute (what if there’s more than the available m-lines?), and (iii) it would present considerable challenges in how to re-use the same mountpoint for different subscribers (for subscribers that are also publishers, how do you exclude their own feeds from the mountpoint so that they don’t subscribe to themselves?).

These are not blocking issues, and something can indeed be built around such an architecture nevertheless, but it’s clear that it could make the work a bit harder. For this reason, we started looking into an easier approach instead, one that could implement the concept of cascading within the VideoRoom plugin itself.

Why not simply cascade rooms via WebRTC?

This may seem like the obvious answer, and can work for some use cases, but it’s not a viable solution for all of them for a few different reasons.

First of all, let’s elaborate a bit on what that means in the first place. Cascading participants via WebRTC simply means that we can orchestrate a connection between a participant on Janus #1 and a room on Janus #2 via a WebRTC PeerConnection: simply said, we could orchestrate a Janus-to-Janus PeerConnection, so that a participant on one Janus instance can become a participant on another one as well. In a nutshell, this would mean:

- creating a “fake” subscriber for that participant, so that we can get an SDP offer from Janus #1;

- creating a “fake” publisher on Janus #2, where we provide the offer we just received and get an SDP answer back;

- send back the answer we just received on the “fake” subscriber handle, to complete the negotiation.

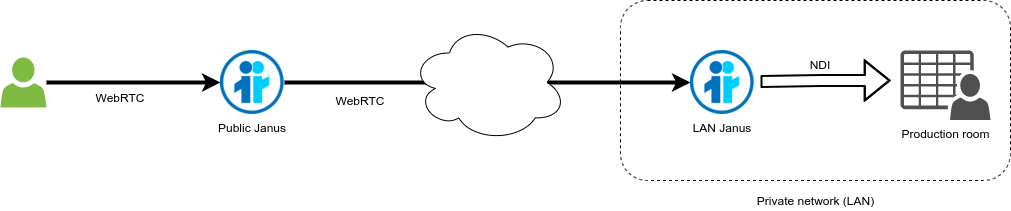

The end result would be a PeerConnection between Janus #1 and Janus #2, where media sent by the source participant is made available as an actual participant in that other room as well. This does indeed work, and as a matter of fact is, for instance, at the basis of our NDI integration process, where a participant joining a public Janus instance is connected to a local Janus instance via a Janus-to-Janus PeerConnection, so that the local Janus instance can turn it to an NDI feed in a local network.
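As a rough sketch of the three steps above, this is how an orchestrator might sequence the messages. The `send_janus1` and `send_janus2` callables are hypothetical (they stand in for whatever transport you use to talk to each instance and return the JSEP that comes back, if any), and the message bodies reflect my reading of the VideoRoom API, so they should be checked against the plugin documentation. Trickle ICE and error handling are left out entirely.

```python
from typing import Callable, Optional

# Hypothetical transport: sends a VideoRoom message (plus optional JSEP) on a
# handle and returns the JSEP that comes back, if any. Not part of Janus.
Send = Callable[[dict, Optional[dict]], Optional[dict]]


def cascade_via_webrtc(send_janus1: Send, send_janus2: Send,
                       room1: int, feed_id: int, room2: int) -> None:
    """Bridge one publisher from Janus #1 into a room on Janus #2."""
    # 1. "Fake" subscriber on Janus #1: Janus replies with an SDP offer.
    offer = send_janus1(
        {"request": "join", "ptype": "subscriber", "room": room1,
         "streams": [{"feed": feed_id}]},
        None,
    )
    if offer is None or offer.get("type") != "offer":
        raise RuntimeError("Janus #1 did not return an SDP offer")

    # 2. "Fake" publisher on Janus #2: pass the offer, get an SDP answer back.
    send_janus2({"request": "join", "ptype": "publisher", "room": room2}, None)
    answer = send_janus2({"request": "publish"}, offer)
    if answer is None or answer.get("type") != "answer":
        raise RuntimeError("Janus #2 did not return an SDP answer")

    # 3. Hand the answer to the "fake" subscriber to complete the negotiation.
    send_janus1({"request": "start"}, answer)
```

Note that the orchestrator only relays SDP between the two instances; media then flows directly over the resulting Janus-to-Janus PeerConnection.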

That said, it does have a few shortcomings that need to be taken into account. First of all, while this may work for “simple” streams, it would not work for simulcast. To be more precise, a simulcast participant on Janus #1 would become a “flattened” non-simulcast participant on Janus #2, due to the fact that a VideoRoom subscription would act as a channel between the two. In WebRTC, a subscriber to a simulcast publisher will in fact not receive all substreams that are being published, but only the one they’re interested in, or the one they’re capable of receiving: this means that the Janus-to-Janus PeerConnection would only distribute one of the substreams (depending on how the subscription is configured), meaning the remotized publisher on Janus #2 would be seen as a plain video publisher instead, with no simulcast capabilities. Again, this is not an issue if the participant was not simulcasting in the first place, but if simulcast is indeed important, being able to preserve the substreams in the cascading process could be very much desirable.

Another potential issue comes from the nature of WebRTC itself. Being able to create a PeerConnection between two Janus instances means they both must be able to interact with a non-traditional WebRTC endpoint in the first place. Most of the time that’s indeed the case, but if both Janus instances are configured as ICE Lite endpoints, for instance, that won’t work: ICE Lite endpoints, in fact, never originate connectivity checks themselves, but only answer incoming ones; if both Janus endpoints expect a connectivity check that the other will never send, you’ll get to an impasse, and no PeerConnection will be created at all.

Finally, while WebRTC provides a very viable way to transport media, it may indeed be overkill as a solution when you’re cascading a lot of streams on the server side across multiple instances, possibly in a network you have complete control over. One of the reasons why we chose RTP as an intermediate technology for our existing VideoRoom/Streaming scalability infrastructure is that it makes it very easy to handle, route and process streams using applications and components that may know nothing about WebRTC. A very simple example we often make is multicast, for instance: while with WebRTC you have to create several peer-to-peer connections to relay the same source stream to multiple recipients, if your network supports multicast it’s much easier to use plain RTP instead, where you can just send each packet once on a multicast group, and multiple recipients may receive the same traffic at the same time. You can find some more information on how that can be done for scalability purposes in Janus in this presentation we made at the MBONED Working Group in the IETF.

What about WHIP?

If you’re not familiar with WHIP, we talked about this extensively in previous posts on this blog.

While WHIP is a powerful tool, which will be very helpful in the context of broadcasting, it can’t really help for cascading purposes, at least not in the way we’re imagining it. In fact, its main job is to facilitate WebRTC ingestion, which means it makes it easier to publish any kind of source via WebRTC to a WebRTC server like Janus: we implemented prototypes of both client and server to be used for Janus, and they both work great for the purpose. That said, if the idea is to use WHIP to facilitate cascading a participant in one Janus instance to another, then we’re not doing anything very different from what was described in the previous section, that is creating a Janus-to-Janus PeerConnection, with its pros and cons.

When it comes to ingesting the same external content (e.g., an OBS produced stream) to multiple Janus instances at the same time, then WHIP can indeed help, but again in a way that is orthogonal to the process of cascading actual participants, and using approaches that may be closer to the existing scalability solutions we already have in place (e.g., SOLEIL).

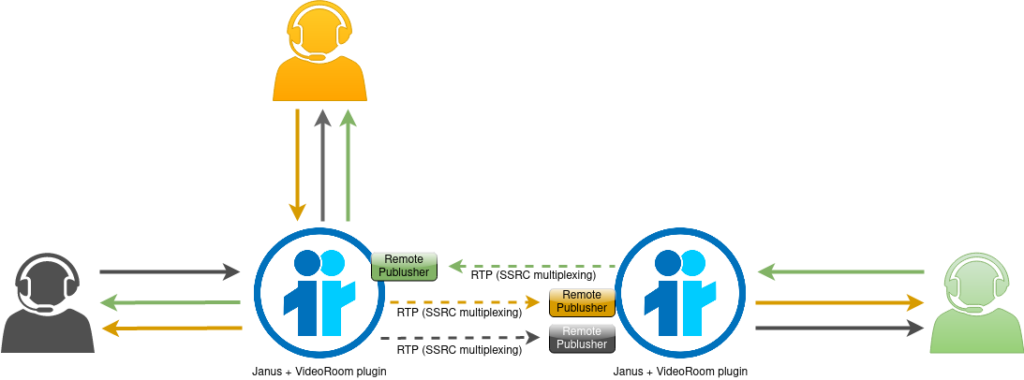

Extending the VideoRoom plugin to support cascading

When we started investigating how we could extend the VideoRoom plugin for cascading purposes, a simple idea that came to mind was the concept of “remote publishers”. In fact, the VideoRoom plugin is based on a publish/subscribe approach, where a stream being published is advertised to other people in the room, meaning they can then decide whether or not to subscribe to it. So far, a stream being published implied a stream being published locally, but it doesn’t really have to be that way: something may be added to the plugin code so that a stream published somewhere else is still advertised as if it was local, meaning the existing VideoRoom API could then be used, without the need for any change, to subscribe to that too. Of course, that only works if that stream published elsewhere is somehow made available here as well, so that the VideoRoom can process and distribute it as if it was indeed local.

This is exactly what we ended up implementing: a so called “remote publisher” that abstracts a media source that is not local to the VideoRoom instance, but is handled as if it was. From an implementation perspective, it doesn’t work very differently from the VideoRoom+Streaming approach we described before, as plain RTP is still used as an intermediate technology between the source (where the stream was published) and the target (where the remote publisher must be advertised). More specifically, the code simply adds something that resembles a Streaming plugin mountpoint within the VideoRoom plugin itself, but with a few key differences:

- First of all, for each remote publisher the plugin binds to a single port for incoming RTP packets, whereas Streaming plugin mountpoints bind to a different port for each m-line. Each incoming stream is demultiplexed out of the SSRC value, where the SSRC itself is computed out of a simple arithmetic formula. This means that it’s very simple to add new streams to an existing remote publisher without having to allocate new ports, thus already making this more flexible than mountpoints, and implicitly “solving” one of the shortcomings we addressed before (mountpoints being static endpoints that can’t grow/shrink beyond what they were created to be).

- This SSRC “arithmetic”, while at the moment quite basic, does take into account simulcast substreams, meaning that each individual substream being contributed can be multiplexed on the same single port introduced above. This helps solve the main shortcoming of the Janus-to-Janus WebRTC cascading we discussed in the previous section, where simulcast would be flattened out in the process.

- The characteristics of each media stream associated with a remote publisher are also dynamic, meaning they can be updated multiple times during the life cycle of the publisher. This is indeed of paramount importance, since a publisher may change the media they’re sending during a session, e.g., starting audio only, then adding a video stream, then adding another video stream, removing something, and so on and so forth. All these updates to a publisher should be notified to other participants in the room, so that subscriptions can be created/updated accordingly (e.g., turning an existing m-line to inactive where a stream now no longer available was advertised, or offering new m-lines for new media now available). This is again a big step forward compared to the VideoRoom/Streaming approach, as it means such a remote publisher is indeed not a static resource at all, but can be updated dynamically during the course of a session to reflect changes at the source.

- Most importantly, this “fake” mountpoint, living within the VideoRoom plugin, acts as an actual publisher within the context of a room, which means all the existing API in the VideoRoom that involve publishers work with these “remote publishers” as well. While an important benefit of this is that remote publishers are notified within the context of the VideoRoom API itself (which masks the fact that the source of the stream is actually remote), the most important added value of such a functionality is the fact that remote publishers can be subscribed to within the context of the VideoRoom plugin as well: this means that a multistream subscription can now contain publishers that are both local and remote, and most importantly this multistream subscription can also be updated dynamically as publishers (whether they’re local or remote) come and go.
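To make the single-port demultiplexing idea a bit more concrete, here is a minimal sketch of what such SSRC arithmetic could look like. The formula and the constants (`BASE_SSRC`, `MLINE_STEP`, `MAX_SUBSTREAMS`) are assumptions made up for illustration: the actual values used by the plugin are not given here, so treat this as one possible scheme rather than the real one.

```python
from typing import Tuple

# Hypothetical constants: the actual formula and values are not stated here.
BASE_SSRC = 1000      # assumed SSRC of the first stream (m-line 0)
MLINE_STEP = 10       # assumed SSRC range reserved for each m-line
MAX_SUBSTREAMS = 3    # assumed cap on simulcast substreams per m-line


def ssrc_for(mindex: int, substream: int = 0) -> int:
    """Compute the SSRC a source would use for a given m-line and substream."""
    if mindex < 0:
        raise ValueError("m-line index must be non-negative")
    if not 0 <= substream < MAX_SUBSTREAMS:
        raise ValueError("substream out of range")
    return BASE_SSRC + mindex * MLINE_STEP + substream


def demux(ssrc: int) -> Tuple[int, int]:
    """Recover (m-line index, substream) from the SSRC of an incoming packet."""
    offset = ssrc - BASE_SSRC
    if offset < 0:
        raise ValueError("SSRC below the base value")
    mindex, substream = divmod(offset, MLINE_STEP)
    if substream >= MAX_SUBSTREAMS:
        raise ValueError("SSRC does not map to a known substream")
    return mindex, substream
```

With a scheme like this, adding a stream to an existing remote publisher only means using the next m-line index: no new port has to be allocated, and simulcast substreams of the same m-line stay distinguishable on the receiving side.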

A simplified diagram that shows how this works from a media exchange perspective, all while keeping it transparent to the involved participants, is provided below.

Now, it’s important to point out that, while this already works quite nicely in the existing pull request, this is not fully automated (yet). More specifically, creating a remote publisher requires an external orchestration of the following steps:

- The first step is invoking add_remote_publisher on the target Janus VideoRoom instance: this is the API by which you can actually create a remote publisher instance within a VideoRoom, by providing a list of streams that should be advertised. The result will be the VideoRoom plugin creating the internal pseudo-mountpoint, meaning it will bind to a UDP port for receiving RTP traffic. When this request has been processed, the remote publisher is immediately available, meaning its presence will be notified to other participants in the room just as if a PeerConnection had just become available, even in the absence of actual RTP traffic.

- Once a remote publisher is listening on the target instance, the second step is invoking publish_remotely on the source instance instead, that is, where the publisher is physically connected. This request only expects connectivity info on the target (e.g., IP address and port), and the plugin will automatically create RTP forwarders for each of the published streams, performing the correct arithmetic for setting the outgoing SSRCs for multiplexing purposes.

To start, this is all that’s needed: the end result will be participants on the target being notified about a new (remote) publisher, and receiving the related media streams in their subscriptions if they subscribed.
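The two steps can be sketched from an orchestrator's point of view as follows. Only the request names (`add_remote_publisher`, `publish_remotely`) come from the description above: the `send_target` and `send_source` callables are hypothetical stand-ins for your own transport, and every field name in the request bodies, as well as the idea that the response reports the bound port under a `port` key, is an assumption that should be checked against the pull request.

```python
from typing import Callable, Dict, List

# Hypothetical transport: sends one synchronous request to a Janus instance
# and returns the plugin response as a dict. Not part of Janus itself.
Send = Callable[[dict], dict]


def create_remote_publisher(send_target: Send, send_source: Send, room: int,
                            publisher_id: int, streams: List[Dict],
                            target_host: str) -> int:
    """Create a remote publisher on the target, then feed it from the source."""
    # Step 1: the target creates the remote publisher and binds a UDP port.
    # All field names below are assumed for illustration.
    response = send_target({
        "request": "add_remote_publisher",
        "room": room,
        "id": publisher_id,
        "streams": streams,
    })
    port = int(response["port"])  # assumption: the response reports the port

    # Step 2: the source starts forwarding RTP to the target's address/port.
    send_source({
        "request": "publish_remotely",
        "room": room,
        "publisher_id": publisher_id,
        "host": target_host,
        "port": port,
    })
    return port
```

The ordering matters: the target must be listening before the source starts forwarding, otherwise the first packets have nowhere to go.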

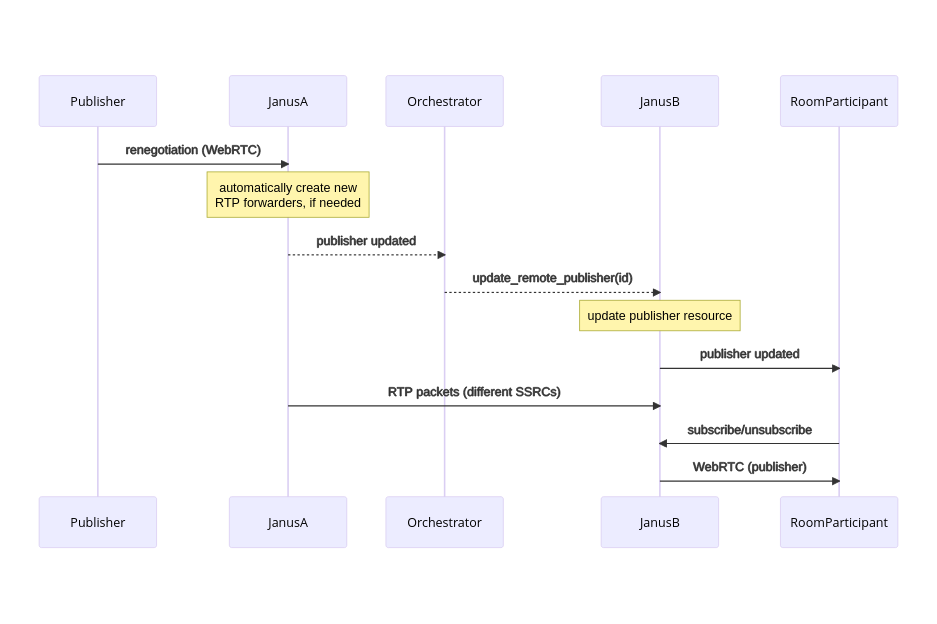

Updating a publisher works in a similar way, even though it’s actually easier. In fact, if a renegotiation on the publisher side results in any stream being added or removed, no API needs to be invoked on the source side: in fact, in case new streams are added, new RTP forwarders (with the right SSRC) are automatically created on the source side for all configured targets, meaning media is automatically sent to the right place; streams being removed automatically result in no traffic for the related m-lines to be forwarded either. That said, the target does need to be updated with respect to changes on the publisher at the source side, otherwise incoming packets for m-lines it is not aware of will be dropped for instance: to do that, an API called update_remote_publisher can be used for the purpose, to instruct the target VideoRoom instance about any change that occurred (new or removed streams, change in descriptions, etc.). This may result in such changes to be advertised to other participants in the room as well, so that they can decide whether to subscribe to, or unsubscribe from, any of those streams.
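Since only the target needs to be told about changes, an orchestrator mostly has to work out what changed between two renegotiations. The sketch below compares two snapshots of a publisher's streams (keyed by mid) and builds an update request only when something differs. The request name is the one mentioned above, but the body layout, including the `disabled` flag used here to mark removed streams, is purely hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Streams = Dict[str, dict]  # mid -> stream description (e.g. {"type": "video"})


def diff_streams(old: Streams, new: Streams) -> Tuple[List[str], List[str], List[str]]:
    """Return the mids that were added, removed and changed between snapshots."""
    added = sorted(mid for mid in new if mid not in old)
    removed = sorted(mid for mid in old if mid not in new)
    changed = sorted(mid for mid in new if mid in old and new[mid] != old[mid])
    return added, removed, changed


def update_request(room: int, publisher_id: int,
                   old: Streams, new: Streams) -> Optional[dict]:
    """Build an update for the target, or None if nothing changed."""
    added, removed, changed = diff_streams(old, new)
    if not (added or removed or changed):
        return None
    # Hypothetical body: current streams, plus removed ones flagged as disabled.
    streams = [{"mid": mid, **new[mid]} for mid in sorted(new)]
    streams += [{"mid": mid, **old[mid], "disabled": True} for mid in removed]
    return {
        "request": "update_remote_publisher",
        "room": room,
        "id": publisher_id,
        "streams": streams,
    }
```

For instance, a publisher that starts audio-only and later adds video would produce a request listing both streams, while an unchanged renegotiation produces no request at all.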

Finally, getting rid of a remote publisher typically does involve both source and target, but doesn’t always have to. First of all, configuring a source Janus instance to stop forwarding media to a specific remote publisher is simple, and can be done by calling an API called unpublish_remotely: this request will automatically destroy all RTP forwarders that were created for the job, and will also instruct the plugin not to automatically create new forwarders for that target should a new renegotiation occur. Notice that calling this API isn’t needed when getting rid of a publisher handle, as resources are cleaned up automatically in this case, while the same won’t happen if just tearing down the PeerConnection. It’s also important to point out this won’t result in the remote publisher also being destroyed on the target instance, though: in fact, the relation between source and target is very loose, and exclusively based on the exchange of RTP/RTCP packets, and RTP packets not being delivered should not be considered as an indication that the publisher went away, but simply that ingestion may be paused; besides, there may be interest in keeping a remote publisher instance alive independently of whether the publisher is there at all, e.g., to account for reconnections, or to possibly reuse the same remote publisher for different publishers with similar characteristics at different points in time. For all of the above reasons, there is an additional API for explicitly getting rid of a remote publisher, called remove_remote_publisher: this request destroys the internal pseudo-mountpoint, and removes all the resources allocated for the remote publisher as well, meaning all other participants in the room are notified about this, just as if a local publisher had just torn down their PeerConnection; as a result, participants may decide to unsubscribe from any of the streams they added to receive that remote publisher.

Why not something completely automated?

When we started brainstorming how this could work, that’s something that we did indeed think about: could this be something completely automated, with different VideoRoom instances talking to each other somehow and synchronizing? The decision we came to was that, while that’s something we won’t rule out for the future, it was easier to start this way, with new ad-hoc APIs to start playing with this new feature. There were actually several reasons why we decided to go that way:

- First of all, it’s not completely different from how we were performing scalability before: as discussed, the VideoRoom integration is similar to (even though not exactly the same as) how Streaming mountpoints work, which means a transition would be relatively easy, and the same considerations we often rely upon in terms of orchestration or RTP-related deployments can be followed exactly the same way.

- Cascading streams and how the cascading signalling works are related, but not the same thing: since we wanted to experiment with the media integration first and foremost, this looser integration as far as API are concerned makes it easy to experiment and prototype different solutions. Choosing an automated inter-VideoRoom signalling from the get go would likely have added constraints that might have needed to be changed later.

- An automated integration normally means that any single stream is remotized across the instances that are part of the “cluster”, which may not always be desirable: for instance, you may want to remotize a single stream (e.g., one that must be visible to everyone across servers), but not all of them (e.g., to allow for smaller local rooms to chat about the shared remote stream), and that would be hard to encode as a set of rules in a flexible way.

As such, we decided to keep it simple and leverage a set of APIs instead. As we’ve seen from the sequence diagrams above, it’s nothing transcendental, and the APIs are quite straightforward to understand as far as when to invoke them and how. Besides, we explicitly designed these new APIs to be synchronous requests, which means they’re easy to use outside of the context of Janus sessions, e.g., via Admin API calls, which makes them easy to script and orchestrate. Of course, some state management still needs to be performed (e.g., to track how many targets exist for the same source, especially in between updates), but nothing truly different from what orchestration components typically need to perform anyway.
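The kind of state management mentioned above can be quite small. The following sketch keeps track of which targets each source publisher has been remotized to, and tells the orchestrator which requests to issue after a renegotiation or when a target is dropped. The class and its method names are hypothetical, and the `"source"` label simply stands for the instance the publisher is connected to; only the request names come from the APIs described in this post.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Source = Tuple[int, int]  # (room, publisher_id)


class CascadeTracker:
    """Remember which targets each source publisher has been remotized to."""

    def __init__(self) -> None:
        self._targets: Dict[Source, Set[str]] = defaultdict(set)

    def add_target(self, source: Source, target: str) -> None:
        self._targets[source].add(target)

    def targets(self, source: Source) -> List[str]:
        return sorted(self._targets.get(source, ()))

    def on_renegotiation(self, source: Source) -> List[Tuple[str, str]]:
        """Requests to send after the publisher adds or removes streams."""
        return [(t, "update_remote_publisher") for t in self.targets(source)]

    def remove_target(self, source: Source, target: str) -> List[Tuple[str, str]]:
        """Requests to send, in order, to stop cascading to one target."""
        if target not in self._targets.get(source, ()):
            return []
        self._targets[source].discard(target)
        if not self._targets[source]:
            del self._targets[source]
        return [("source", "unpublish_remotely"),
                (target, "remove_remote_publisher")]
```

Whether to actually send remove_remote_publisher right after unpublish_remotely is a policy choice: as discussed earlier, you may prefer to keep the remote publisher alive on the target, e.g., to account for reconnections.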

That said, as anticipated we’re not explicitly ruling out the possibility that in the future there may be some automated mechanism to perform the same set of operations. This could be implemented as some form of static association (e.g., “distribute room 1234 on servers A, B and C”), or something more dynamic instead (e.g., via a DHT or something similar). Again, a lot will depend on whether or not the distribution we’ve envisaged in this first effort will change, and on the most common usages people will make of this cascading functionality, since common patterns may suggest one mode of operation over others when it comes to making a choice. Until then, a manual orchestration as the one we added should provide enough flexibility to implement different use cases without too much of a hassle.

That’s all great! Is there anything missing?

As far as media distribution is concerned, the implementation can be considered more or less functionally complete: we did make some tests (also including simulcast) and they seemed to work as expected, which was very good to know. There are a few things to still iron out, though, plus a few other things that it may make sense to add before this feature is merged upstream.

- At the time of writing, data channels don’t work across remote publishers. This is because of how we currently rely on SSRCs to demultiplex traffic on a single RTP port: since data channels don’t use RTP, SSRCs can’t be used there, meaning we can’t demultiplex traffic properly. A simple approach may be “relay anything you don’t recognize as RTP via data channels”, but that may be frail and prone to abuse. Another possible approach may be prefixing a data channel payload with an RTP header where we can put the SSRC we need, but that may risk getting the packet to exceed the MTU due to the RTP header overhead. We haven’t decided on a good way forward yet, so for the moment data channels can be negotiated but not cascaded.

- RTP extensions don’t currently work with remote publishers either. Due to the way the core works, extensions are automatically stripped from RTP packets a plugin tries to send to users, meaning it’s up to plugins to fill in a struct with the extension properties to set in outgoing packets, and the core will actually add them to packets. Considering this remotization of publishers relies on a plain RTP intermediate layer, extensions may indeed be forwarded too, but they’ll be filtered out by the core. In order to make extensions work, the plugin would as a consequence need to parse RTP extensions on the way in, and turn them to the struct format the core expects internally. This is definitely doable, but requires a bit of work, which is why for the sake of simplicity we decided to just skip extensions entirely in this first integration.

- While we haven’t mentioned this explicitly, the remotization of remote publishers does take into account, in a limited way, RTCP as well. The way RTCP works is very similar to how it works in the Streaming plugin, with a socket that expects latching from a client to be able to then send RTCP feedback (e.g., a PLI to request a new keyframe) back to the actual source. This works fine when there’s a single video being published, but may not work as well when the publisher is actually sending more than one video at the same time, since the single RTCP socket we use currently does not address SSRC multiplexing the same way as the RTP socket does. This is something we plan to address before merging the branch upstream.

- As discussed, remote publishers created on a target are considered exactly as any other publisher connected locally on the instance: this means that all actions that can be performed on a local publisher (e.g., recording, or RTP forwarding) work on remote publishers as well. That said, this doesn’t extend to a further remotization of a remote publisher, which means that, at the time of writing, you can’t make a new remote publisher out of a remote publisher. The main rationale for this was mostly to simplify the code, but it’s not something we’re against: actually, good chances are that sooner or later we will indeed add this functionality as well, e.g., to further decouple the source of a stream from the nodes that are distributing it across different instances.
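Two of the points above are easier to reason about with some bytes in hand. The sketch below is illustrative only and does not reflect what the branch does today: the first pair of functions shows the "prefix data with an RTP header" idea, including the 12-byte overhead that eats into the MTU, while the second pair shows how the media SSRC carried in an RTCP PLI could, in principle, be used to tell which video stream a keyframe request refers to. The header layouts follow the RTP and RTCP feedback specifications; `ASSUMED_MTU_BUDGET` and `ASSUMED_DATA_PT` are hypothetical values picked for the example.

```python
import struct
from typing import Optional, Tuple

RTP_HEADER_LEN = 12          # fixed RTP header, no CSRCs or extensions
ASSUMED_MTU_BUDGET = 1200    # hypothetical per-packet budget, in bytes
ASSUMED_DATA_PT = 127        # hypothetical payload type reserved for data
RTCP_PSFB = 206              # payload-specific feedback packet type
FMT_PLI = 1                  # feedback message type for PLI


def wrap_data(payload: bytes, ssrc: int, seq: int) -> bytes:
    """Prefix a data channel message with a minimal RTP header."""
    if RTP_HEADER_LEN + len(payload) > ASSUMED_MTU_BUDGET:
        raise ValueError("message would exceed the assumed MTU budget")
    # Version 2, no padding/extension/CSRC; timestamp left at zero.
    header = struct.pack("!BBHII", 0x80, ASSUMED_DATA_PT, seq & 0xFFFF, 0, ssrc)
    return header + payload


def unwrap_data(packet: bytes) -> Tuple[int, bytes]:
    """Return (ssrc, payload) from a packet built by wrap_data."""
    if len(packet) < RTP_HEADER_LEN:
        raise ValueError("packet shorter than an RTP header")
    ssrc = struct.unpack("!I", packet[8:12])[0]
    return ssrc, packet[RTP_HEADER_LEN:]


def build_pli(sender_ssrc: int, media_ssrc: int) -> bytes:
    """Build an RTCP PLI: V=2, FMT=1, PT=206, length=2 (32-bit words minus one)."""
    return struct.pack("!BBHII", 0x80 | FMT_PLI, RTCP_PSFB, 2,
                       sender_ssrc, media_ssrc)


def pli_media_ssrc(packet: bytes) -> Optional[int]:
    """Return the media SSRC a PLI refers to, or None if this is not a PLI."""
    if len(packet) < 12:
        return None
    first, pt, _length, _sender, media = struct.unpack("!BBHII", packet[:12])
    if (first >> 6) != 2 or (first & 0x1F) != FMT_PLI or pt != RTCP_PSFB:
        return None
    return media
```

The `wrap_data` check makes the MTU concern explicit: any message within 12 bytes of the budget no longer fits once the header is added, which is exactly why this approach is not an obvious win.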

That said, none of the above limitations are dealbreakers, meaning the feature can definitely already be used and tested as is. We do plan to keep on improving how it works as we get feedback from users, so any information you can provide from using this feature would be more than welcome, and would help accelerate the development of what’s missing.

What’s next?

As anticipated, despite a few limitations, the feature is basically functionally complete, which means it can already be used for testing. Most of the upcoming activities will involve fine tuning what’s there and work on feedback we receive in the process, as well as working on some of the features that are missing.

An important next step, though, will be addressing a different limitation that currently exists in the VideoRoom plugin, and that’s related to its scalability functionality. Due to the way the internal packet routing works in the VideoRoom, there’s a limit to how many subscribers a single publisher can have: this limitation doesn’t exist in the Streaming plugin, or better yet, it can be worked around using the so-called helper threads to decouple the ingestion from the distribution, which is a feature we use a lot in our own deployments. Considering this remotization of VideoRoom publishers may indeed help not only in geo-distributing participants, but also in scaling a publisher across multiple Janus instances, it would indeed be helpful to add a similar mechanism to the VideoRoom plugin as well. While we won’t add it in this WIP branch (whose main focus is on the remotization of publishers, and not scalability per se), we’ll definitely start working on that too as soon as the existing branch is merged.

That’s all, folks!

I hope you enjoyed this short introduction to a new exciting feature. As we explained, this is just the first step and there’s more to come, so if what you read sounded interesting and the possibilities intrigue you, please do give the pull request a try and let us know how it may be improved: feedback would be quite helpful!